Deploying advanced analytics for predictions

Advanced analytics in the pharma industry is a problem-solving journey through historical, current and predicted data, with the resulting insights driving better decisions.

Efforts typically begin with a monitoring focus, concentrating on the most recent operations data available, with a goal of quickly identifying and surfacing issues. As an organization’s analytics efforts mature, it often begins to leverage historical advanced analytics as well, diving into the terabytes of data stored in historians or other databases to refine insights. Using a process often referred to as diagnostic or root cause analytics, subject matter experts (SMEs) can explore recent issues, such as a deviation during the previous batch, or long-term issues, such as the frequency of agitator bearing failures over the past decade.

With historical advanced analytics, the goal is to use stored data to inform process changes that improve operations. These changes may focus on preventive maintenance or mitigation strategies, both intended to reduce process deviations or equipment failures. Other goals may include optimization initiatives, such as changing process setpoints to match the historical batches that produced the highest quality or yield.

Leveraging historical data shifts an organization from simply monitoring and reacting to issues as they arise to using data and context to better inform process improvement decisions. While historical advanced analytics are useful for finding and eliminating common issues, this type of process improvement is still inherently reactive: an issue must have already occurred before it can be investigated and prevented from recurring.

Fortunately, there is a way to anticipate some problems before they occur, enabling the implementation of proactive solutions.

Back to the future

For certain asset or process failures, it may be possible to eliminate the issue entirely, for example by adding a cleaning step to remove contamination. In many situations, however, the failure stems from equipment performance degradation or other gradual, foreseeable causes that cannot simply be designed out, making it critical to extend historical analysis into predictive analytics.

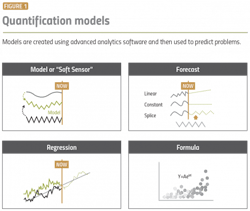

Predictive analytics is a type of advanced analytics that uses historical data to build and train a model that projects expected future data (Figure 1). A common manufacturing use case is equipment-based prediction, also known as predictive maintenance, where the goal is to anticipate when equipment will require maintenance or fail.

Other common use cases include process-based predictions, where the goal is to improve the process by modeling product quality, optimizing setpoints or acting as a soft sensor for parameters that are not easily measured. In both equipment and process scenarios, historical data is analyzed to train an algorithm that projects future data based on a set of constraints and assumptions.

Categorization versus quantification

Predictive maintenance techniques often differ based on the type of equipment and its typical failure mode or event, but the purpose of the predictions remains constant: to increase quality, reliability and uptime.

Predictive model creation starts with choosing the best technique: categorization or quantification. Categorization models use classification and clustering algorithms to assign a category to each segment of the data; these categories often correspond to distinct operating modes.

When using a categorization technique for predictive maintenance, data is usually assigned to normal or abnormal operation categories, where abnormal operation indicates a potential imminent failure. While categorization models can be useful in specific applications, quantification models are generally preferred because they provide more granular information about equipment health.
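As a minimal sketch of the categorization approach, the Python below uses a nearest-centroid classifier, one simple classification technique chosen here for illustration (the article does not name a specific algorithm). It assumes labeled historical data has already been split into hypothetical "normal" and "abnormal" groups, each a list of feature vectors such as vibration and bearing temperature.

```python
from math import dist


def centroid(points):
    """Component-wise mean of a list of equal-length feature vectors."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))


def train_nearest_centroid(labeled):
    """labeled maps a category name to its historical feature vectors."""
    return {label: centroid(points) for label, points in labeled.items()}


def classify(model, x):
    """Return the category whose centroid is closest to x (Euclidean distance)."""
    return min(model, key=lambda label: dist(model[label], x))


# Hypothetical features: (vibration in mm/s, bearing temperature in °C)
model = train_nearest_centroid({
    "normal": [(2.0, 55.0), (2.2, 56.0), (1.9, 54.5)],
    "abnormal": [(6.5, 71.0), (7.1, 73.5)],
})
print(classify(model, (2.1, 55.5)))  # normal
```

In practice, features with different units should be standardized first; otherwise the larger-magnitude tag (temperature, in this hypothetical example) dominates the distance calculation.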

For example, instead of an algorithm sounding an alert to indicate that equipment performance has gone awry and failure is imminent, a quantification model can provide the current rate of equipment degradation to inform the time frame for required maintenance. With quantification models, regression algorithms train or fit a numerical equation or model to relevant historical data. These models can then be used to predict future data, from which insights can be generated.
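To illustrate the quantification approach, here is a minimal sketch of an ordinary least-squares line fit. The fitted slope plays the role of the degradation rate described above. The function name and the performance-index example are hypothetical.

```python
def fit_line(t, y):
    """Ordinary least-squares fit of y = intercept + slope * t."""
    n = len(t)
    t_mean = sum(t) / n
    y_mean = sum(y) / n
    sxx = sum((ti - t_mean) ** 2 for ti in t)
    sxy = sum((ti - t_mean) * (yi - y_mean) for ti, yi in zip(t, y))
    if sxx == 0:
        raise ValueError("need at least two distinct time values")
    slope = sxy / sxx              # degradation rate: units of y per unit of t
    intercept = y_mean - slope * t_mean
    return intercept, slope


# Hypothetical performance index logged at 0, 10, 20 and 30 days of runtime
intercept, slope = fit_line([0, 10, 20, 30], [100.0, 98.0, 96.0, 94.0])
```

Here the fit gives an intercept of about 100 and a slope of about -0.2, that is, a loss of roughly 0.2 index points per day of runtime.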

Quantifying with first principles

Quantitative predictive maintenance models fit an equation to an available data set. The input data set usually includes a time variable, which counts the runtime since the previous maintenance event, along with one or more process parameters. Process parameters may be raw tags from a data historian or calculated tags created using advanced analytics, such as a principal component calculated from several input variables.
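The time variable can be derived from a list of maintenance timestamps. The sketch below assumes that elapsed wall-clock hours are an acceptable proxy for runtime; a true runtime counter would sum only the periods when the equipment was actually running. `runtime_since_maintenance` is a hypothetical helper, not part of any historian API.

```python
from bisect import bisect_right
from datetime import datetime


def runtime_since_maintenance(timestamps, maintenance_events):
    """Hours elapsed since the latest maintenance event at or before each timestamp.

    Returns None for timestamps that precede the first recorded event.
    Elapsed wall-clock time is used as a proxy for runtime (an assumption).
    """
    events = sorted(maintenance_events)
    hours = []
    for ts in timestamps:
        i = bisect_right(events, ts)  # number of events at or before ts
        if i == 0:
            hours.append(None)
        else:
            hours.append((ts - events[i - 1]).total_seconds() / 3600.0)
    return hours


events = [datetime(2024, 1, 1), datetime(2024, 1, 10)]
samples = [datetime(2023, 12, 31, 12), datetime(2024, 1, 2), datetime(2024, 1, 10, 6)]
print(runtime_since_maintenance(samples, events))  # [None, 24.0, 6.0]
```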

The preferred approach is to develop models from first principles equations that describe equipment performance. These calculations can represent a wide array of phenomena, including pressure drops, internal friction or thermal efficiency, based on established laws, principles and theorems. With this method, data can be dimensionally reduced and fit with an appropriate regression algorithm while minimizing the amount of training data required.

Once the model is trained on historical data, it must be projected into the future, which requires forecasting every input to the model. Several forecasting options are available. For continuous processes, it is common to assume that the future value will be the same as the average value from the previous day or week. For batch or transient processes, forecasting is more complex and may rely on regression calculations or average daily or batch profiles.
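For a continuous process, the trailing-average forecast can be sketched as a flat projection in which every future point takes the mean of the most recent window of data. The window, horizon and step are assumptions the user chooses, for example one day of history projected a week ahead; the function name is hypothetical.

```python
from datetime import datetime, timedelta


def trailing_mean_forecast(samples, window, horizon, step):
    """Flat forecast at the mean of the last `window` of data.

    samples: time-ordered list of (datetime, value) pairs.
    window, horizon, step: timedelta objects chosen by the user.
    """
    last_ts = samples[-1][0]
    recent = [v for ts, v in samples if ts > last_ts - window]
    level = sum(recent) / len(recent)
    n_steps = int(horizon / step)  # timedelta / timedelta -> float
    return [(last_ts + step * (k + 1), level) for k in range(n_steps)]


start = datetime(2024, 1, 1)
# Hypothetical hourly tag: 10.0 on day one, 12.0 on day two
samples = [(start + timedelta(hours=h), 10.0 if h < 24 else 12.0) for h in range(48)]
forecast = trailing_mean_forecast(samples, timedelta(days=1), timedelta(hours=6), timedelta(hours=1))
print(len(forecast), forecast[0][1])  # 6 12.0
```

A flat level is the simplest option; a batch process would instead replay an average batch profile.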

Once all the model inputs have been forecasted, the model outputs can be used to determine when maintenance will be needed by alerting when the model forecast reaches a critical value. The critical value for maintenance may be defined by subject matter expertise, vendor specifications, an analysis of historical failures or issues, or a combination of these.
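The alerting step amounts to scanning a forecast for the first point that reaches the critical value. The minimal sketch below assumes the forecast is a time-ordered list of (time, value) pairs. Setting `falling=True` suits metrics that decline with degradation, such as a heat transfer coefficient, and `falling=False` suits metrics that rise, such as membrane resistance. The function name and example model are hypothetical.

```python
def first_crossing(forecast, critical, falling=True):
    """First (time, value) pair in a time-ordered forecast that reaches `critical`.

    falling=True: alert when value drops to or below critical.
    falling=False: alert when value rises to or above critical.
    Returns None if the critical value is never reached within the forecast.
    """
    for t, value in forecast:
        reached = value <= critical if falling else value >= critical
        if reached:
            return t, value
    return None


# Hypothetical linear degradation forecast: value = 100 - 0.5 * days of runtime
forecast = [(day, 100 - 0.5 * day) for day in range(31, 401)]
print(first_crossing(forecast, 80.0))  # (40, 80.0)
```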

Predictive maintenance case studies

One example of using first principles equations for predictive maintenance in the pharma industry is detecting fouling or degradation of heat exchangers and filtration membranes. Heat exchangers are used on the process contact side as jacketed tanks and condensers, and on the utility side in equipment such as steam generators and cooling towers.

While the process contact side of the exchanger may be cleaned and inspected regularly, utility applications are often run for extended periods of time. Although typically considered clean services, they may contain impurities or sediment that cause scaling or fouling to build up over time.

Heat exchanger efficiency and performance are quantified using the overall heat transfer coefficient, which is derived from an energy balance based on the First Law of Thermodynamics. This coefficient is calculated from the flow rates, temperatures and physical constants such as the exchanger surface area. Using the heat transfer coefficient reduces the dimensions of the model from six tags (four temperatures and two flow rates) to a single performance parameter. This technique has proven especially effective when the models are paired with data cleansing techniques that isolate periods of steady-state flow.
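A minimal sketch of this dimensional reduction follows. It assumes a counter-current exchanger, constant specific heats, mass flow rates (volumetric historian tags would first be converted using density) and the log-mean temperature difference (LMTD) form of the rate equation, `Q = U * A * LMTD`. The heat duty Q comes from an energy balance on each stream, and the two estimates are averaged. The steady-state check is a hypothetical stand-in for the data cleansing step, using an arbitrary 2% coefficient-of-variation threshold.

```python
import math
from statistics import mean, pstdev


def lmtd_counterflow(th_in, th_out, tc_in, tc_out):
    """Log-mean temperature difference for a counter-current exchanger."""
    dt1 = th_in - tc_out  # hot inlet vs cold outlet
    dt2 = th_out - tc_in  # hot outlet vs cold inlet
    if dt1 <= 0 or dt2 <= 0:
        raise ValueError("temperature cross: LMTD is undefined")
    if math.isclose(dt1, dt2):
        return dt1  # limit of the LMTD formula as dt1 -> dt2
    return (dt1 - dt2) / math.log(dt1 / dt2)


def heat_transfer_coefficient(th_in, th_out, tc_in, tc_out,
                              m_hot, m_cold, cp_hot, cp_cold, area):
    """Overall U in W/(m^2 K) from four temperatures and two mass flows.

    Temperatures in °C or K, m_* in kg/s, cp_* in J/(kg K), area in m^2.
    """
    q_hot = m_hot * cp_hot * (th_in - th_out)     # heat released by hot stream, W
    q_cold = m_cold * cp_cold * (tc_out - tc_in)  # heat absorbed by cold stream, W
    q = 0.5 * (q_hot + q_cold)                    # average the two energy balances
    return q / (area * lmtd_counterflow(th_in, th_out, tc_in, tc_out))


def is_steady(flow_window, rel_tol=0.02):
    """Hypothetical steady-state filter: coefficient of variation below rel_tol."""
    m = mean(flow_window)
    return m > 0 and pstdev(flow_window) / m < rel_tol


# Hypothetical water/water exchanger: 1 kg/s each side, 10 m^2
u = heat_transfer_coefficient(90.0, 60.0, 20.0, 50.0, 1.0, 1.0, 4180.0, 4180.0, 10.0)
print(u)  # 313.5
```

Computing U only for windows where `is_steady` holds keeps transient startup and shutdown data from masquerading as fouling.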

Similarly, filtration membranes, which are found throughout the process and utility sides of pharma plants, foul and degrade over time. Membrane performance can be reduced to a single membrane resistance value using Darcy’s Law, which relates the flow rate to the pressure difference across the membrane, along with constants such as the filter area and fluid viscosity. After reducing the data to this single performance metric, the trend between maintenance cycles can be modeled as a function of time and other process parameters.
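In the membrane form of Darcy’s Law, the permeate flux is J = TMP / (μ·R_m) with J = Q / A, so the membrane resistance can be solved for directly as R_m = TMP·A / (μ·Q), where TMP is the transmembrane pressure. The sketch below assumes SI units and a TMP computed from one upstream and one downstream pressure reading; temperature correction of viscosity is left out, and the function name is hypothetical.

```python
def membrane_resistance(p_upstream, p_downstream, flow, area, viscosity):
    """Membrane resistance R_m in 1/m from Darcy's law.

    J = TMP / (viscosity * R_m) with J = flow / area, so R_m = TMP / (viscosity * J).
    Pressures in Pa, flow in m^3/s, area in m^2, viscosity in Pa*s.
    """
    if flow <= 0 or area <= 0 or viscosity <= 0:
        raise ValueError("flow, area and viscosity must be positive")
    tmp = p_upstream - p_downstream  # transmembrane pressure, Pa
    flux = flow / area               # permeate flux, m/s
    return tmp / (viscosity * flux)


# Hypothetical readings: 1 bar TMP, 0.1 L/s through 0.5 m^2 of a water-like fluid
r_m = membrane_resistance(200_000.0, 100_000.0, 1e-4, 0.5, 1e-3)
```

For these inputs R_m comes out at about 5 × 10^11 per meter; a rising R_m between cleanings is the fouling trend the model tracks.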

Most models require only linear regression, but more advanced techniques may be needed. For example, polynomial or exponential regressions may be incorporated based on subject matter expertise regarding the failure mechanism or on the empirical fit to historical data. Outputs of the statistical analysis, such as p-values, help identify parameters with minimal impact on the predictive model, which can then be removed to reduce complexity and noise. Finally, the regression model can be extrapolated into the future based on expected future inputs, and it can generate alerts when the model output reaches a critical value.
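When subject matter expertise suggests an exponential failure mechanism, one common shortcut is to fit a straight line to ln(y), as in the sketch below. This is a swapped-in illustration, not necessarily the method behind the article's models. Fitting on the log scale weights relative rather than absolute errors. The p-values used for parameter screening would typically come from a statistics package's regression summary and are not computed here.

```python
import math


def fit_exponential(t, y):
    """Fit y = a * exp(b * t) by least squares on ln(y). Requires all y > 0."""
    if any(v <= 0 for v in y):
        raise ValueError("exponential fit needs strictly positive y")
    ln_y = [math.log(v) for v in y]
    n = len(t)
    t_mean = sum(t) / n
    l_mean = sum(ln_y) / n
    sxx = sum((ti - t_mean) ** 2 for ti in t)
    sxy = sum((ti - t_mean) * (li - l_mean) for ti, li in zip(t, ln_y))
    b = sxy / sxx                        # growth rate on the log scale
    a = math.exp(l_mean - b * t_mean)    # back-transform the intercept
    return a, b


# Hypothetical resistance-like metric growing about 10% per unit of runtime
t = [0.0, 1.0, 2.0, 3.0]
a, b = fit_exponential(t, [2.0 * math.exp(0.1 * ti) for ti in t])
```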

Figures 2 and 3 show predictive maintenance models built using an advanced analytics application for heat exchangers and filter membranes, respectively. Heat exchanger operation is typically continuous and was assumed to span a range of process conditions, so low and high estimates were forecasted. Filter membrane operation is typically a batch process, so the model assumed future batches would run at the same frequency as in the past.
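For the batch case, the assumption that future batches run at the historical frequency can be sketched by projecting start times forward at the mean historical interval. `project_batches` is a hypothetical helper; a complete model would also need a per-batch profile for each input.

```python
from datetime import datetime, timedelta


def project_batches(batch_starts, n_future):
    """Project future batch start times at the mean historical batch interval."""
    starts = sorted(batch_starts)
    if len(starts) < 2:
        raise ValueError("need at least two historical batches")
    gaps = [later - earlier for earlier, later in zip(starts, starts[1:])]
    mean_gap = sum(gaps, timedelta()) / len(gaps)  # timedelta / int -> timedelta
    return [starts[-1] + mean_gap * (k + 1) for k in range(n_future)]


history = [datetime(2024, 1, 1), datetime(2024, 1, 3), datetime(2024, 1, 5)]
projected = project_batches(history, 2)  # 2024-01-07 and 2024-01-09
```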

Process-based predictions

Process-based prediction models, also known as predictive quality models, follow a similar approach to predictive maintenance models, but the goal shifts from modeling equipment performance to predicting a critical quality attribute (CQA), such as potency or yield.

While first principles formulas may be available for certain applications, such as reaction kinetics, process-based predictions tend to rely on empirical models derived from design of experiments or numerous production runs. The benefits of process-based predictions can be achieved in both batch and continuous operations by identifying optimal process conditions, reducing cycle times and providing real-time insight into the process, all without waiting for sample results.
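An empirical predictive quality model is often a multiple linear regression from process parameters to the CQA. The self-contained sketch below solves the least-squares normal equations directly with Gauss-Jordan elimination. The parameters (for example, a hypothetical temperature and pH predicting yield) are illustrative, and production work would more likely use a numerical library with better conditioning.

```python
def fit_multiple_linear(X, y):
    """Least-squares coefficients [b0, b1, ..., bk] for y = b0 + sum(bj * xj).

    X: list of rows, each a list of k process parameters.
    Solves (A^T A) b = A^T y by Gauss-Jordan elimination with partial pivoting.
    """
    rows = [[1.0] + [float(v) for v in r] for r in X]  # prepend intercept column
    p = len(rows[0])
    # Augmented normal-equation matrix [A^T A | A^T y]
    m = [[sum(r[i] * r[j] for r in rows) for j in range(p)]
         + [sum(r[i] * yi for r, yi in zip(rows, y))] for i in range(p)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular design matrix; check for collinear parameters")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(p):
            if r != col:  # eliminate this column from every other row
                factor = m[r][col] / m[col][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[col])]
    return [m[i][p] / m[i][i] for i in range(p)]


def predict(coeffs, x):
    """Soft-sensor estimate of the CQA for one row of process parameters."""
    return coeffs[0] + sum(c * xi for c, xi in zip(coeffs[1:], x))


# Hypothetical history generated from yield = 50 + 2*x1 - 0.5*x2
X = [[1, 10], [2, 12], [3, 9], [4, 15], [5, 11]]
y = [50 + 2 * x1 - 0.5 * x2 for x1, x2 in X]
coeffs = fit_multiple_linear(X, y)
```

Solving the normal equations squares the condition number of the design matrix, so strongly correlated process parameters can make the coefficients unstable; this is one more reason to prune low-impact parameters before fitting.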

Reactive to proactive

Many pharmaceutical processes are run on a reactive basis, with plant personnel dealing with issues as they occur. A better approach is to predict problems before they occur, which provides a number of benefits, including lower maintenance costs, increased uptime and improved quality.