While the definition of quality differs from one situation to the next, the commitment to ensuring it is universal, as is the desire for a safe and effective production process. Whether you are responsible for a clean water process or the overall productivity of a lab, or are pursuing continuous improvement and manufacturing excellence, an effective data analytics solution provides a lasting foundation.

In a pharmaceutical environment, having enough data is typically not the issue. The challenge is having the right data at the right time, along with the best tools to investigate key quality metrics quickly. It can also be difficult to prioritize the following requirements when implementing an advanced analytics solution:

- Easy access to old and new data sources without requiring significant IT investment

- Simplified analysis and visualization tools that most scientists and engineers can use

- Flexibility to support a spectrum of model development needs without requiring IT resources

- Centralized access to results by teams along with long-term knowledge management

- A user-friendly utility for real-time action using boundary management and alarm notifications

Advances in user-friendly analytics software, along with growing computational power and inexpensive data storage, now provide options to meet each of these needs.

In this article, we review several considerations for implementing an advanced analytics solution that provides the appropriate level of control over quality metrics. The case studies offer real-world examples of successful implementations that deliver:

- Data source integration to drive quality in batch processing,

- Flexibility to support a spectrum of model development needs without requiring IT resources, and

- Centralized team access to results for short and long-term knowledge management.

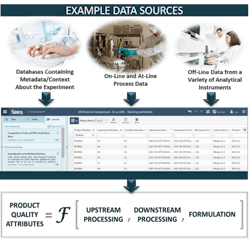

The right solution will deliver what is needed to assemble, cleanse, search, visualize, contextualize, investigate and share insights from disparate data sources (Exhibit 1).

Exhibit 1

Approach: Quality Tied to the Process

An assessment of a company's needs, beginning with strong stakeholder engagement, will quickly identify the gaps in the current data analytics environment that make quality control overly challenging. A solution can then be implemented that gives those trying to understand the correlations needed to predict and control quality the flexibility to do so. Advanced analytics combined with data-focused technologies is crucial to transforming process and analytical data from sensors and instrument systems into useful information and actionable intelligence.

To deliver value to the organization, analytics must generate actionable intelligence about quality metrics. The following case studies illustrate how to use advanced analytics software to:

- Assess a process to understand the cause-and-effect relationships critical for maintaining quality,

- Analyze and then optimize a process step for consistent operation, and

- Receive early warning that a process may be moving away from desired parameters, while there is still time to assess and react in a meaningful way.

Using advanced analytics software to execute these steps yields faster, smarter insights that improve execution, drive down costs and increase earnings. It is also a better approach than the traditional reliance on spreadsheets, which typically requires time-consuming manual gymnastics to transform data into whatever format is needed to link with useful tools such as multivariate analysis programs, modeling applications and visualization software.

An advanced analytics solution, in contrast, provides easy access to disparate data sets while streamlining connectivity to these other tools. With the right solution, it is simple to add new data sources and to customize views of the data to extract the desired information efficiently, as the following examples show.

Exhibit 2

Case Study Examples

Implementing an Advanced Analytics Strategy to Optimize Quality in the Batch Production of a Biotherapeutic or Active Pharmaceutical Ingredient

Effective control over the production of a biotherapeutic or active pharmaceutical ingredient (API) is critical for achieving target product quality attributes. However, batch-to-batch variability during the upstream process can have profound impacts on the efficacy of the final product, with direct process and cost implications for downstream partners.

In this first example, the data analytics strategy discussed above was applied to optimize the quality of batch operations for both a chemically defined API and a biotherapeutic (Exhibit 2). For the API, the cooling and filtration steps were analyzed for consistency, and the process was tuned to optimize crystal properties.

Since key product quality attributes affecting downstream processing and product performance — such as purity, particle size distribution, polymorphic form and crystal habit — are defined by how a process is run, it is important to correlate these metrics with process conditions.

Seeq, an advanced analytics solution, was used to couple on-line process data, off-line analytical data and batch context data to rapidly identify opportunities for process improvement. This yielded tighter control of the anti-solvent addition step and an optimized filtration process, resulting in higher yield and purity, more consistent batches, optimized powder properties, reduced cycle times and lower cost of goods.
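Coupling the three data types comes down to an alignment problem: each off-line lab result must be tagged with its batch and paired with the on-line process value that was current when the sample was taken. The Python sketch below shows one minimal way to do that with step ("last known value") interpolation. It is a hypothetical illustration only: `Batch`, `value_at`, `align` and the numbers in the usage example are assumptions, and the code does not use or represent Seeq's own API.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass
class Batch:
    # Hypothetical batch context record: ID plus start/end times (e.g. hours)
    batch_id: str
    start: float
    end: float


def value_at(times, values, t):
    """Return the last on-line reading at or before time t (step interpolation).

    `times` must be sorted ascending; returns None if no reading exists yet.
    """
    i = bisect_right(times, t)
    if i == 0:
        return None  # no on-line reading at or before t
    return values[i - 1]


def align(batches, online_times, online_values, lab_samples):
    """Pair each off-line lab result with its batch and the on-line value.

    lab_samples: list of (sample_time, result) tuples.
    Returns a list of (batch_id, sample_time, online_value, result) rows;
    samples that fall outside every batch window are dropped.
    """
    rows = []
    for t, result in lab_samples:
        batch = next((b for b in batches if b.start <= t <= b.end), None)
        if batch is None:
            continue  # sample not within any batch; no context to attach
        rows.append((batch.batch_id, t, value_at(online_times, online_values, t), result))
    return rows
```

For example, with batches `B1` (0 to 10) and `B2` (12 to 22), a lab sample at time 3 is paired with the on-line reading logged at time 2, while a sample at time 11 is dropped because it falls between batches. The resulting rows are in a shape that correlation or multivariate tools can consume directly.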

For the biotherapeutic, bioreactor batches were compared across two equipment scales (100L and 3L) to develop an effective scale-down model. Correlations were developed among metabolites, oxygen concentrations, viable cell density and protein titer, ultimately enabling robust scale-up to meet key clinical timelines. Cost savings came from giving users enough time to reconfigure the process and still meet the clinical timeline, which saved the cost of a clinical batch.
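One simple check when building a scale-down model is whether a process variable relates to titer in the same way at both scales. The Python sketch below computes a Pearson correlation coefficient between a per-batch summary variable and titer, separately for each scale. It is a hypothetical illustration: the per-batch dictionaries, the `compare_scales` function and any variable names (such as a peak lactate value) are assumptions, and a matching coefficient is only one piece of evidence, not proof that the small-scale model is representative.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined for a constant sequence")
    return sxy / sqrt(sxx * syy)


def compare_scales(batches_by_scale, variable, target="titer"):
    """Correlate one per-batch variable with the target at each scale.

    batches_by_scale: dict mapping a scale label (e.g. "100L", "3L") to a
    list of per-batch dicts containing `variable` and `target` keys.
    """
    return {
        scale: pearson([b[variable] for b in rows], [b[target] for b in rows])
        for scale, rows in batches_by_scale.items()
    }
```

If the coefficients at 3L and 100L have similar sign and magnitude for the variables that matter, the scale-down model is more likely to be useful for predicting scale-up behavior; large differences point to a variable worth investigating further.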

Implementing an Advanced Analytics Strategy to Monitor and Maintain Consistent Water Quality

In both upstream and downstream processing in pharma or biotech, ensuring consistent water quality requires process analytical technology (PAT) that provides on-line particle count and bioburden monitoring in real time. It also requires access to current and historical data from the water system and other disparate data sources. With those data sources accessible and tools available to analyze them, this strategy provides a good foundation for process understanding.

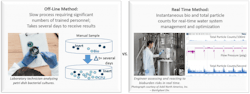

In this water system example, the goal was to calculate biologic particle counts in real time, reducing reliance on the existing off-line standard method of measuring colony-forming units (CFUs), a multi-day process (Exhibit 3). Because CFU results arrive days after sampling, the key quality metrics, total and biologic particle counts, could not be tied to the process as it was running, so there was no way to correlate bioburden with other metrics.

Exhibit 3

Gathering real-time particle and biologic count data and analyzing it in Seeq made it possible to present the measurements visually, allowing users to:

- Observe transient changes impacting performance with insight on a per-second basis,

- Trend totalized particle and microbial counts over time,

- Identify repeating patterns impacting water loop performance, opening the door to insights about how particle counts are related to overall water system operation,

- Provide handles for process monitoring with boundary management and alarm notifications.
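Trending totalized counts amounts to summing per-second counts into time buckets and, optionally, keeping a running total. The Python sketch below illustrates that under stated assumptions: counts arrive as hypothetical `(epoch_seconds, count)` pairs, and `totalize`, `running_total` and the 60-second default bucket are illustrative choices, not the data format of the IMD-W instrument or of any analytics product.

```python
from itertools import accumulate


def totalize(samples, bucket_seconds=60):
    """Sum per-second particle (or microbial) counts into fixed time buckets.

    samples: iterable of (epoch_seconds, count) pairs, in any order.
    Returns a list of (bucket_start_seconds, total_count), sorted by time.
    """
    buckets = {}
    for t, count in samples:
        start = int(t // bucket_seconds) * bucket_seconds
        buckets[start] = buckets.get(start, 0) + count
    return sorted(buckets.items())


def running_total(bucketed):
    """Cumulative count across buckets, for a totalized trend over time."""
    starts = [s for s, _ in bucketed]
    return list(zip(starts, accumulate(c for _, c in bucketed)))
```

For example, counts of 2 and 1 at seconds 0 and 30, 4 at second 61, and 3 at second 125 give per-minute totals of 3, 4 and 3, and a running total of 3, 7 and 10. Comparing bucket totals across days or shifts is one way to surface the repeating patterns mentioned above.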

With so many data signals available, users also wanted to be notified when a process was moving away from defined parameters. This would give them an opportunity to react before losses occurred, rather than conducting a post-mortem on a contaminated run. As part of this solution, users needed to draw on historical data to define operating boundaries and alarm limits, and to create notifications.

With the advanced analytics solution in place, the water system is now tested for biologic and total particle counts in real time, and the results are analyzed in concert with process data about the system and the external environment. Combining real-time biologic and total particle counts from an IMD-W system with a data historian gives users the power to:

- Leverage all the best data sources: Connect IMD-W, data historian and MES data sources together for access by a data analytics program,

- Analyze using historical context: Perform calculations that draw on historical data,

- Develop understanding: Develop models connecting the water system operation with particle count data to define cause and effect relationships,

- Create structure: Define operating boundaries and management logic,

- Implement, review and improve: Define an alarm strategy and feed outputs back into the data historian system's alarm notification software.

Enabling real-time notifications required defining the boundaries of normal operation, along with logic for how and when users should be notified. The team started with the simplest rule, added a small number of scenarios, and then developed a work process.
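One possible form of such a simple starting rule is sketched below in Python. Two assumptions are made here rather than taken from the case study: the limits of normal operation are the historical mean plus or minus `k` standard deviations (a common control-chart convention), and a notification fires only after `persist` consecutive samples fall outside those limits, which suppresses one-off spikes. `boundaries`, `notifications`, `k` and `persist` are hypothetical names and parameters.

```python
from statistics import mean, stdev


def boundaries(history, k=3.0):
    """Derive (lower, upper) limits of normal operation from historical data.

    Assumes mean +/- k sample standard deviations; needs >= 2 points.
    """
    m, s = mean(history), stdev(history)
    return m - k * s, m + k * s


def notifications(values, limits, persist=3):
    """Return the sample indices at which a notification fires.

    A notification fires when `persist` consecutive values fall outside the
    limits. It fires once per excursion and re-arms only after a value
    returns inside the boundary.
    """
    lo, hi = limits
    run, fired, alarms = 0, False, []
    for i, v in enumerate(values):
        if v < lo or v > hi:
            run += 1
            if run >= persist and not fired:
                alarms.append(i)
                fired = True
        else:
            run, fired = 0, False
    return alarms
```

Raising `persist` trades a slightly later notification for fewer nuisance alarms, while `k` controls how wide "normal" is; both are the kinds of settings that would be revisited as scenarios are added.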

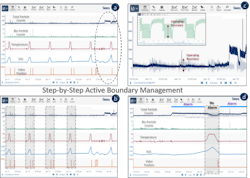

With this strategy in place, iteration is key: refine boundary definitions where they matter most for quality, then correct false positives and false negatives as they occur. This rapid feedback loop lets the user adjust each boundary to reflect the desired control of that quality metric more accurately, with results depicted in Exhibit 4.

Exhibit 4
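The iteration on false positives and false negatives described above can be made more systematic by scoring candidate limits against past runs whose excursions have been reviewed and labeled. The Python sketch below is hypothetical: it assumes a single signal, a simple rising-edge alarm on a fixed upper limit, and hand-labeled event windows (`events`), and `rising_edges`, `score` and `tune` are illustrative names, not part of any product.

```python
def rising_edges(values, upper):
    """Indices where the signal first crosses above the upper limit."""
    return [
        i for i, v in enumerate(values)
        if v > upper and (i == 0 or values[i - 1] <= upper)
    ]


def score(alarms, events):
    """Return (false_positives, false_negatives) for one limit setting.

    events: list of inclusive (start, end) index ranges that reviewers
    labeled as real excursions. An alarm outside every event is a false
    positive; an event with no alarm inside it is a false negative.
    """
    def in_event(i):
        return any(s <= i <= e for s, e in events)

    fp = sum(1 for i in alarms if not in_event(i))
    fn = sum(1 for s, e in events if not any(s <= i <= e for i in alarms))
    return fp, fn


def tune(values, events, candidates):
    """Pick the candidate upper limit with the fewest total misclassifications."""
    return min(candidates, key=lambda u: sum(score(rising_edges(values, u), events)))
```

Here both error types count equally; weighting false negatives more heavily in `tune`'s key function would be one way to reflect that a missed contamination event may cost far more than a nuisance alarm.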

Empowering Focus

An effective advanced analytics solution provides a lasting foundation essential to long-term success in quality management and metrics. It is imperative to give scientists, engineers and managers the tools to extract and analyze only the data they need, and to let them focus on that data.

As demonstrated in the case studies provided, a flexible, self-service advanced analytics strategy enables a robust method of selecting the data needed for analysis and decision-making for both process scale-up and real-time process monitoring.

These are key factors in meeting clinical timelines. Well-defined process boundaries and monitoring also reduce mistakes and improve process quality and, ultimately, product quality. By combining disparate data sources and making the analytics process user-friendly, these solutions make it much easier to achieve effective work processes that support execution through analysis and reporting; optimized use of time and key resources that avoids unnecessary experimentation; and knowledge creation and management that streamlines cross-group access to existing data and shared results.

The key to achieving quality is equipping internal resources with the right advanced analytics solutions for their specific needs and enabling them to focus on just the data of interest.

Acknowledgements: The author wishes to thank Brian Crandall and Brian Parsonnet at Seeq; and Dr. Allison Scott, Aric Meares and Mary Parsons from Azbil BioVigilant Division.
