Alleviating bottlenecks in the product life cycle

In the last few years, supply chain disruptions in the pharma industry have highlighted the need for high-performance, distributed manufacturing that can accelerate the delivery of new products to patients and increase manufacturers’ profitability. These disruptions, coupled with recent legislation, have created economic pressures that will force pharma manufacturers to change how they develop and price their products.

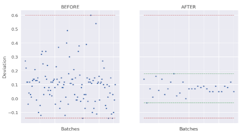

Existing workflows and tools, many of which are still manual or paper-based, cannot support the pace of pharmaceutical product development, the number of products in development at any one time, or the growing use of biologics and cell and gene therapies. Process development (PD) capacity has become a bottleneck, and practitioners are under severe pressure to adapt. In manufacturing, tech transfer, scale-up, and general capacity creation and optimization are also highly inefficient. Deviation investigations and the resulting improvements still take several weeks (sometimes months), further restricting the industry’s ability to adapt to the new economic environment.

Adopting AI and data-driven techniques can make PD much more efficient by capturing molecule and chemistry, manufacturing, and controls (CMC) knowledge algorithmically for tech transfer and scale-up. Process and operational qualification then become more efficient as well, in some cases reducing the number of engineering and qualification runs by 50% or more. AI can also automate deviation and variability investigations, enable autonomous control with predictable titers and quality, and support real-time release. Data and models generated by advanced AI-based PD tools help PD scientists move from manual to digital processes, which in turn relieves the bottlenecks that affect teams across the industry.

AI implementation challenges

One of the biggest challenges of implementing AI in manufacturing is the availability of ready-to-use, contextualized data for retrospective (posterior) analysis, real-time analysis, and AI-driven predictive operations. The problem is pervasive across the industry: even pharma companies that have invested heavily in data science and analytics teams struggle with it, and those teams report spending three to four times more time on data preparation than on building models. In addition, AI models are often intended to run on streaming (real-time) data but are tested on clean, offline historical data. This gives users a false impression of high accuracy, and the models then perform poorly once deployed in the streaming environment.
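
The optimism of offline testing can be illustrated with a small, self-contained sketch. It assumes a hypothetical linear soft sensor (`soft_sensor`) and a single process reading dropped in transit. The offline evaluation fills the gap by linear interpolation, which quietly uses the next reading; the streaming replay processes records one at a time and can only carry the last observed value forward, as a deployed model would. The function names and data are illustrative, not part of any product.

```python
from typing import Callable, List, Optional, Sequence


def soft_sensor(x: float) -> float:
    """Hypothetical soft sensor: predicts a quality value from one process reading."""
    return 2.0 * x + 1.0


def offline_mae(xs: Sequence[Optional[float]], ys: Sequence[float],
                model: Callable[[float], float]) -> float:
    """Offline evaluation on 'cleaned' history: each gap is filled by linear
    interpolation, which peeks at the NEXT known reading (future data)."""
    filled: List[float] = []
    for i, x in enumerate(xs):
        if x is not None:
            filled.append(x)
            continue
        # Assumes the first reading is present and a later reading exists.
        nxt = next(j for j in range(i + 1, len(xs)) if xs[j] is not None)
        prev_value = filled[i - 1]
        step = (xs[nxt] - prev_value) / (nxt - i + 1)
        filled.append(prev_value + step)
    errors = [abs(model(x) - y) for x, y in zip(filled, ys)]
    return sum(errors) / len(errors)


def streaming_mae(xs: Sequence[Optional[float]], ys: Sequence[float],
                  model: Callable[[float], float]) -> float:
    """Streaming replay: records arrive one at a time, so a gap can only be
    bridged with the last value already received (no look-ahead)."""
    last: Optional[float] = None
    errors: List[float] = []
    for x, y in zip(xs, ys):
        if x is not None:
            last = x
        if last is None:
            continue  # nothing received yet, so no prediction is possible
        errors.append(abs(model(last) - y))
    return sum(errors) / len(errors)


if __name__ == "__main__":
    # True process values are 1..5; the third sample was dropped in transit.
    readings = [1.0, 2.0, None, 4.0, 5.0]
    quality = [3.0, 5.0, 7.0, 9.0, 11.0]  # lab results consistent with the soft sensor
    print(f"offline MAE:   {offline_mae(readings, quality, soft_sensor):.2f}")  # 0.00
    print(f"streaming MAE: {streaming_mae(readings, quality, soft_sensor):.2f}")  # 0.40
```

Here the offline error is zero and the streaming error is not, purely because of look-ahead in the cleaning step. Real deployments have other sources of mismatch (latency, out-of-order samples, sensor recalibration), so replaying history in time order is a minimum check rather than a full test.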

A second challenge is the availability of large data sets. Most machine learning and AI algorithms originated in big-data settings, yet pharma manufacturing generally lacks large amounts of valuable, usable data, with many manufacturers still relying heavily on paper. The problem is even more pronounced in PD.

A third challenge is the model deployment pipeline and its integration with other systems (often referred to as MLOps). Once accurate AI models have been built, they must be deployed on a reliable pipeline that supports performance monitoring and version control, and integrated with process control and quality systems.
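
As a rough sketch of what monitoring for performance and versioning can mean, the hypothetical in-memory registry below keeps every registered model version, tracks which one is active, and raises a degradation flag when the rolling mean absolute error over a fixed window exceeds a threshold. The class and method names, the window size, and the threshold are assumptions for illustration; a production MLOps pipeline would also persist versions, record who deployed what, and feed results to quality systems.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, List, Optional


@dataclass(frozen=True)
class ModelVersion:
    version: int
    predict: Callable[[float], float]
    note: str = ""


class ModelRegistry:
    """Hypothetical in-memory registry: keeps every model version, tracks the
    active one, and monitors its rolling mean absolute error (MAE)."""

    def __init__(self, window: int = 5, max_mae: float = 1.0) -> None:
        self._versions: List[ModelVersion] = []
        self._active: Optional[int] = None
        self._errors: Deque[float] = deque(maxlen=window)
        self.window = window
        self.max_mae = max_mae

    def register(self, predict: Callable[[float], float], note: str = "") -> int:
        version = ModelVersion(len(self._versions) + 1, predict, note)
        self._versions.append(version)  # older versions stay available for rollback
        return version.version

    def activate(self, version: int) -> None:
        if not 1 <= version <= len(self._versions):
            raise ValueError(f"unknown model version {version}")
        self._active = version
        self._errors.clear()  # monitoring restarts for the newly deployed version

    def predict(self, x: float) -> float:
        if self._active is None:
            raise RuntimeError("no model version is active")
        return self._versions[self._active - 1].predict(x)

    def record_outcome(self, x: float, actual: float) -> None:
        """Log the active model's error once the true value (e.g. a lab result) is known."""
        self._errors.append(abs(self.predict(x) - actual))

    def degraded(self) -> bool:
        """True once the window is full and its MAE exceeds max_mae."""
        if len(self._errors) < self.window:
            return False
        return sum(self._errors) / len(self._errors) > self.max_mae


if __name__ == "__main__":
    registry = ModelRegistry(window=3, max_mae=0.5)
    registry.activate(registry.register(lambda x: 2.0 * x, "initial fit"))
    for x, lab_value in [(1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]:  # process has drifted by +1
        registry.record_outcome(x, lab_value)
    print("degraded:", registry.degraded())  # degraded: True
```

Clearing the error window on activation is a deliberate choice: a newly deployed version starts with a clean monitoring record instead of inheriting alarms raised against its predecessor.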

Purpose-built solutions

The ideal way to address these challenges is a complete, coherent, end-to-end solution for the entire pharmaceutical product life cycle. Rather than a generic AI/machine learning workbench, the best solution provides data connectivity, contextualization, MLOps, and a suite of applications that use AI for PD, multivariate data analysis (MVDA) batch analytics, deviation trend prediction, process analytical technology (PAT) soft sensors, batch evolution profiling and prediction, process optimization, and even autonomous operation of bioreactors. This approach increases adoption and shortens time-to-value by removing the need for heavy investment in data science teams, years of R&D, and large amounts of historical data. It also eases regulatory acceptance, because the solution can be validated for its ‘intended use’ rather than with a focus on the AI itself.
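
Batch evolution profiling is usually done with multivariate (PCA- or PLS-based) models. The sketch below is a deliberately simplified, univariate stand-in: it builds a per-time-point envelope (mean plus or minus k standard deviations) from historical good batches and reports where a new batch leaves it. It assumes all batches have already been aligned to a common time or maturity axis; the function names, `k = 3`, and the data are illustrative choices.

```python
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def build_envelope(good_batches: Sequence[Sequence[float]],
                   k: float = 3.0) -> List[Tuple[float, float]]:
    """Per-time-point (low, high) limits: mean plus or minus k sample standard deviations.

    Assumes at least two historical batches, already aligned so that index t
    means the same point in batch evolution for every batch."""
    if len(good_batches) < 2:
        raise ValueError("need at least two historical batches")
    n_points = len(good_batches[0])
    if any(len(b) != n_points for b in good_batches):
        raise ValueError("batches must be aligned to the same length")
    limits: List[Tuple[float, float]] = []
    for t in range(n_points):
        values = [batch[t] for batch in good_batches]
        center, spread = mean(values), stdev(values)
        limits.append((center - k * spread, center + k * spread))
    return limits


def out_of_envelope(batch: Sequence[float],
                    limits: Sequence[Tuple[float, float]]) -> List[int]:
    """Indices where the new batch leaves the historical envelope."""
    return [t for t, (x, (low, high)) in enumerate(zip(batch, limits))
            if not low <= x <= high]


if __name__ == "__main__":
    history = [[1.0, 2.0, 3.0], [1.2, 2.2, 3.2], [0.8, 1.8, 2.8]]  # illustrative data
    limits = build_envelope(history)
    print(out_of_envelope([1.1, 2.9, 3.0], limits))  # [1]
```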

Because most pharma manufacturing is still batch-based, the ideal is a purpose-built solution that includes both the data framework and the AI analytics specifically for batch manufacturing. General IIoT platforms do not inherently support this, and the lack of this capability is a major blocker for deployment. Most operational data platforms, both legacy systems and newer open cloud platforms, lack material and product context, which limits their usefulness for holistic analytics. A specialized product and process context module lets users define and capture this context and combine it with the traditional ISA-88 batch context.
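
One way to picture a product and process context module is as a record that carries ISA-88 physical context (site, area, process cell, unit) and procedural context (procedure, unit procedure, operation, phase) alongside product and material context, such as the batch and the raw-material lots it consumed. The sketch below is a hypothetical data model, not a standard schema; the class and field names are assumptions. The query function shows the kind of question ("which readings came from batches that used this media lot?") that cannot be answered when material context is missing.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class EquipmentContext:
    """Subset of the ISA-88 physical model (hypothetical field names)."""
    site: str
    area: str
    process_cell: str
    unit: str


@dataclass(frozen=True)
class ProcedureContext:
    """ISA-88 procedural hierarchy: procedure > unit procedure > operation > phase."""
    procedure: str
    unit_procedure: str
    operation: str
    phase: str


@dataclass(frozen=True)
class MaterialContext:
    """Product and material context, modeled here as a separate layer."""
    product: str
    batch_id: str
    input_lots: Tuple[str, ...]  # raw-material lot IDs consumed by this batch


@dataclass(frozen=True)
class ContextualizedReading:
    """A single tag value together with all three kinds of context."""
    tag: str
    timestamp: datetime
    value: float
    equipment: EquipmentContext
    procedure: ProcedureContext
    material: MaterialContext


def readings_for_lot(readings: Sequence[ContextualizedReading],
                     lot_id: str) -> List[ContextualizedReading]:
    """Return readings from batches that consumed the given raw-material lot."""
    return [r for r in readings if lot_id in r.material.input_lots]


if __name__ == "__main__":
    equipment = EquipmentContext("Site A", "Upstream", "Cell 1", "BR-2000")
    procedure = ProcedureContext("mAb-X fed-batch", "Production culture", "Fed-batch", "Feed addition")
    readings = [
        ContextualizedReading("BR2000.PH.PV", datetime(2024, 1, 1, 8, 0), 7.01, equipment, procedure,
                              MaterialContext("mAb-X", "B-001", ("MEDIA-LOT-17",))),
        ContextualizedReading("BR2000.PH.PV", datetime(2024, 2, 1, 8, 0), 6.94, equipment, procedure,
                              MaterialContext("mAb-X", "B-002", ("MEDIA-LOT-18",))),
    ]
    for r in readings_for_lot(readings, "MEDIA-LOT-17"):
        print(r.material.batch_id, r.tag, r.value)
```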

Although much of the focus is on manufacturing, the gap in workflows and language between PD and manufacturing is a major barrier to gaining value from digitalization. Take language first: during PD, equipment, sensors, tags, and ISA-88 batch context such as recipes and procedures have little relevance. PD scientists speak in terms of critical process parameters (CPPs), critical quality attributes (CQAs), and critical material attributes (CMAs), which in manufacturing become part of process control, equipment, and variables. Capturing both vocabularies in a unified workflow addresses one of the biggest challenges in knowledge management and tech transfer, as well as the difficulty of implementing quality by design (QbD). It also lets the molecule sponsor track product performance and quality on a unified foundation when working with contract development and manufacturing organizations (CDMOs).
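
The translation between PD language and manufacturing tags can be sketched as a mapping from PD-level parameter definitions (CPPs, CQAs, CMAs with their ranges) to the tag names used at each scale or site. In the hypothetical sketch below, `TAG_MAP`, the tag names, and the site keys are invented for illustration. The function returns per-tag limits for one site and lists any parameter that has no mapped tag there, the kind of gap a tech-transfer review would need to close. Unit conversion and scale-dependent range adjustments are left out.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass(frozen=True)
class ParameterSpec:
    """A PD-level parameter definition, expressed in the PD scientist's terms."""
    name: str    # e.g. "culture pH"
    kind: str    # "CPP", "CQA" or "CMA"
    low: float   # lower end of the acceptable range
    high: float  # upper end of the acceptable range
    unit: str


# Hypothetical mapping: PD parameter name -> {site or scale key -> tag name}.
TAG_MAP: Dict[str, Dict[str, str]] = {
    "culture pH": {"pd_2L": "BR01.PH.PV", "site_a_2000L": "A-BR2K-AIC101.PV"},
    "dissolved oxygen": {"pd_2L": "BR01.DO.PV", "site_a_2000L": "A-BR2K-DIC102.PV"},
}


def transfer_limits(specs: Sequence[ParameterSpec],
                    site: str) -> Tuple[Dict[str, Tuple[float, float]], List[str]]:
    """Map PD ranges onto one site's tags; also report parameters with no tag there."""
    limits: Dict[str, Tuple[float, float]] = {}
    unmapped: List[str] = []
    for spec in specs:
        tag = TAG_MAP.get(spec.name, {}).get(site)
        if tag is None:
            unmapped.append(spec.name)  # a gap to close before tech transfer
        else:
            limits[tag] = (spec.low, spec.high)  # assumes units match at both scales
    return limits, unmapped


if __name__ == "__main__":
    specs = [
        ParameterSpec("culture pH", "CPP", 6.8, 7.2, "pH"),
        ParameterSpec("glucose feed rate", "CPP", 0.5, 1.5, "mL/min"),
    ]
    limits, unmapped = transfer_limits(specs, "site_a_2000L")
    print(limits)
    print("unmapped:", unmapped)
```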

A complete, contextualized data pipeline that feeds both streaming and batch AI models eliminates mundane data access and preparation tasks, so analytics teams can focus on model building, which raises their productivity and efficiency. With a machine learning pipeline tightly integrated with the incoming data, the solution supports models built with either its own or open machine learning frameworks, and it delivers model outputs to OT, visualization, process control, and quality management systems. Models can also be tested on streaming data to confirm that they will perform as expected.

The necessity of explainability

Model explainability is the ability of a model to explain itself and its output transparently, in a way that a non-specialist can understand. In regulated industries such as pharma, acceptance, adoption, and compliance require a degree of explainability that most open AI frameworks do not provide. A solution with built-in explainability offers evidence-based support for validation and gives PD scientists the added benefit of prediction and hypothesis testing.
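
For simple models, explainability can be made concrete. The sketch below shows one common additive attribution for a linear model: each feature's contribution is its weight times the feature's deviation from a baseline (for example, training-set means), so the contributions sum exactly to the difference between the prediction and the baseline prediction. For linear models with independent features this coincides with SHAP values. The titer model, its weights, and the feature names are hypothetical, and this is not a description of any particular product's explainability method.

```python
from typing import Dict


def predict(weights: Dict[str, float], intercept: float, x: Dict[str, float]) -> float:
    """Linear model: intercept + sum of weight * feature value."""
    return intercept + sum(w * x[name] for name, w in weights.items())


def contributions(weights: Dict[str, float], baseline: Dict[str, float],
                  x: Dict[str, float]) -> Dict[str, float]:
    """Additive attribution w_i * (x_i - baseline_i).

    The values sum to predict(x) - predict(baseline) for any intercept,
    so each prediction is fully accounted for relative to the baseline."""
    return {name: w * (x[name] - baseline[name]) for name, w in weights.items()}


if __name__ == "__main__":
    weights = {"temperature_C": -0.5, "pH": 2.0, "feed_rate": 1.5}  # hypothetical titer model
    intercept = 10.0
    baseline = {"temperature_C": 36.5, "pH": 7.0, "feed_rate": 1.0}  # e.g. training-set means
    batch = {"temperature_C": 37.5, "pH": 7.0, "feed_rate": 1.2}
    delta = predict(weights, intercept, batch) - predict(weights, intercept, baseline)
    print(f"prediction vs baseline: {delta:+.2f}")
    # List features from largest to smallest absolute contribution.
    for name, value in sorted(contributions(weights, baseline, batch).items(),
                              key=lambda kv: -abs(kv[1])):
        print(f"{name:>14}: {value:+.2f}")
```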

People often assume that AI cannot be used in a regulated environment, or is hard to validate, because it is a ‘black box.’ In reality, validating an application or process that uses AI is no different from validating one built on traditional methods. Regulators do, of course, expect manufacturers to follow the validation protocols required for GxP applications. The problem is that validation is difficult when these applications are built from ad hoc, ‘one-off’ models and workbenches. A better path is a product- and platform-centric approach with 21 CFR Part 11 and GxP compliance at every step of data handling and of model building, testing, and deployment. Well-documented validation protocols make it easier to deploy the system in a regulated process, and access control and audit trails built into the platform help ensure ongoing compliance.
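
21 CFR Part 11 calls for secure, computer-generated, time-stamped audit trails that record operator entries and actions that create, modify, or delete electronic records. One technique for making such a trail tamper-evident is hash chaining: each entry stores the SHA-256 hash of its own content plus the previous entry's hash, so editing a past entry breaks verification. Part 11 does not mandate this particular technique, and the class and field names below are hypothetical; a real system would also need protected storage, access control, and a reliable time source.

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class AuditEntry:
    timestamp: str
    user: str
    action: str
    detail: str
    prev_hash: str
    entry_hash: str


def _digest(timestamp: str, user: str, action: str, detail: str, prev_hash: str) -> str:
    """SHA-256 over a JSON encoding of the entry content and the previous hash."""
    payload = json.dumps([timestamp, user, action, detail, prev_hash])
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditTrail:
    """Hypothetical append-only, hash-chained audit trail (in memory only)."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self._entries: List[AuditEntry] = []

    def append(self, user: str, action: str, detail: str,
               timestamp: Optional[str] = None) -> AuditEntry:
        ts = timestamp if timestamp is not None else datetime.now(timezone.utc).isoformat()
        prev = self._entries[-1].entry_hash if self._entries else self.GENESIS
        entry = AuditEntry(ts, user, action, detail, prev, _digest(ts, user, action, detail, prev))
        self._entries.append(entry)
        return entry

    def entries(self) -> Tuple[AuditEntry, ...]:
        return tuple(self._entries)  # read-only view; there is no update or delete method

    def verify(self) -> bool:
        """Recompute every hash. Editing or reordering any entry, or deleting
        one that is not the latest, breaks the chain."""
        prev = self.GENESIS
        for e in self._entries:
            expected = _digest(e.timestamp, e.user, e.action, e.detail, e.prev_hash)
            if e.prev_hash != prev or e.entry_hash != expected:
                return False
            prev = e.entry_hash
        return True


if __name__ == "__main__":
    trail = AuditTrail()
    trail.append("analyst1", "create", "soft-sensor model v1 registered")
    trail.append("qa_reviewer", "approve", "model v1 approved for use")
    print("chain intact:", trail.verify())
```

Note that silently dropping the most recent entry is not detected by the chain alone; storing the latest hash somewhere independent (for example, in a separate system) closes that gap.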

Regulatory-compliant advanced technologies that help manufacturers adapt to the changing landscape and to supply chain variability exist today, and they can help lower development and manufacturing costs. As a result, higher-quality, life-altering therapies can reach patients sooner and in greater numbers. Wider use of advanced digital capabilities across the pharmaceutical product life cycle is essential for staying competitive in the industry.