Quality by Design (QbD) is a strategic concept for operational excellence in any manufacturing operation, and it has become a focused initiative for many highly regulated life science companies, such as pharmaceutical and biotech companies. The goal is to link manufacturing intelligence to product and process research and development (R&D) data in order to streamline the operational changes required to produce a quality product reliably, consistently and at the lowest manufacturing cost in a variable environment.

The key challenge is developing the contextual link between routine production/manufacturing data sets and the vast array of experimental data obtained during the product and process development and pilot lifecycle stages. By correlating critical process parameters (CPPs) in the plant with critical quality attributes (CQAs), and relating their variations to the R&D development data sets, a production facility can define a platform for real-time operational excellence. In addition, manufacturing intelligence can add a significant knowledge base to the R&D cycles for new products in development. The ultimate benefits are shorter R&D cycle times, improved technology transfer to pilot and manufacturing, and significantly reduced operational costs in commercial production. Minor shifts in CPPs can be corrected in as close to real time as needed to ensure that CQAs stay within specification, avoiding the delays and rework loops that add to costs. This is true operational excellence.

The devil, of course, is in the data. Capturing, cataloging and operating on R&D and QC data becomes a critical-path requirement for effective QbD. Historically, these data have resided in numerous silos and have been difficult, if not impossible, to access and use. Implementing a QbD strategy effectively requires a single data capture and management platform spanning R&D, pilot, QC/QA and manufacturing. Such a platform creates the environment for turning data assets into information, which, when interpreted, forms the knowledge that can create the wisdom needed for QbD and operational excellence.

The 2.0 regulatory environment

Recent regulatory initiatives have highlighted the need for science-based process understanding and a culture of continuous improvement in life science manufacturing. The FDA and other regulatory agencies are encouraging the industry to adopt technologies that move operations from “quality by inspection” to “quality by design,” with the goal of continuous quality verification (CQV) and ultimately “real-time release.” Although QbD and process analytical technology (PAT) improvements are not yet regulatory requirements, the original cGMPs (21 CFR 211.110(a)) specify that “…control procedures shall be established to monitor the output and to validate the performance of those manufacturing processes that may be responsible for causing variability in the characteristics of in-process material and the drug product.” Furthermore, continuous improvement and the establishment of process consistency are no longer expected only during process development and scale-up; they are now expected to be ongoing components of the entire product lifecycle.

Successful approaches to “real-time quality assurance” require that the sources of variability in the CQAs be identified and understood so that they can be measured and controlled in real time using appropriate technologies and equipment. The process parameters that drive this variability are the CPPs. When combined with a culture of continuous improvement and the right supporting technology environment, this approach can drive adoption of better practices and sustain higher levels of predictability and quality compliance across the entire manufacturing value chain. The resulting business benefits to life science manufacturers are significant:

• Increased predictability of manufacturing output and quality

• Reduced batch failure, final product testing and release costs

• Reduced operating costs from fewer deviations and investigations

• Reduced raw material, work-in-progress (WIP) and finished product inventory costs

• Greater understanding, control and flexibility within the supply chain

• Faster tech transfer between development, pilot and manufacturing

• Faster regulatory approval of new products and process changes

• Fewer and shorter regulatory inspections of manufacturing sites

These benefits accelerate time to revenue, reduce cost of goods sold (COGS) and address the new regulatory environment in an industry that is feeling pressure from expiring patents and less predictable product pipelines. They also translate into significantly reduced risks and costs to consumers.
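The real-time quality assurance and continuous quality verification described in this section can be illustrated with a simple control-chart check. The sketch below assumes Shewhart-style limits of the mean plus or minus three sample standard deviations, computed from a hypothetical in-control baseline; textbook individuals charts often estimate sigma from the moving range instead. The assay values, specification limits and function names are illustrative only. The point it shows is that a result can be inside specification yet outside control limits, which signals a process shift early enough to adjust CPPs before a batch fails.

```python
# Minimal sketch of continuous quality verification for one CQA:
# control limits (mean +/- k sigma) come from an in-control baseline, and each
# new result is checked against both the control limits and the specification.
# All values and names are hypothetical.
from statistics import mean, stdev


def control_limits(baseline: list[float], k: float = 3.0) -> tuple[float, float]:
    """Return (lower, upper) limits as mean -/+ k sample standard deviations."""
    m, s = mean(baseline), stdev(baseline)
    return m - k * s, m + k * s


def classify(value: float, limits: tuple[float, float],
             spec: tuple[float, float]) -> str:
    """Label a result against specification first, then control limits."""
    lcl, ucl = limits
    lsl, usl = spec
    if not (lsl <= value <= usl):
        return "out of specification"
    if not (lcl <= value <= ucl):
        return "out of control"  # still in spec, but the process has shifted
    return "in control"


# Hypothetical assay results (% label claim) from in-control batches.
baseline = [99.8, 100.2, 100.0, 99.9, 100.1, 100.0, 99.7, 100.3]
limits = control_limits(baseline)
spec = (95.0, 105.0)  # hypothetical release specification

for result in [100.1, 101.2, 94.5]:
    print(result, classify(result, limits, spec))
```

Checking control limits as well as specification is what turns end-of-line testing into an early-warning signal.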

QbD Informatics - Data Capture, Contextualization, Access and Knowledge Creation

Adopting informatics to support QbD is not a single event but a series of events that constitute a planned journey from tactical, reactive science to strategic, information-driven science. The journey begins with the transition from a paper-based environment to a digital one, together with the departmental standardization required for harmonized data exchange across the enterprise. Through this process, data can be effectively found and mined for QbD modeling. Modern informatics helps organizations better understand and describe the variables affecting the CQAs and ultimately optimize processes to achieve product and operational goals. This is the essence of QbD.
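Departmental standardization for harmonized data exchange often starts with a shared vocabulary. The sketch below assumes a hypothetical mapping table that renames each source system's local field names to common parameter names and converts units (here Fahrenheit to Celsius); unmapped fields are returned for review rather than silently dropped. The source names, field names and mapping are invented for illustration and do not reflect any particular product.

```python
# Minimal sketch of departmental harmonization: each source system's field
# names and units are mapped onto one shared vocabulary before data are
# exchanged. Sources, field names and the mapping table are hypothetical.

# source -> {local field name: (harmonized parameter name, converter)}
FIELD_MAP = {
    "rd_eln": {
        "Temp_F": ("temperature_c", lambda f: (f - 32.0) * 5.0 / 9.0),
        "Mix_rpm": ("agitation_rpm", float),
    },
    "plant_mes": {
        "TT101_degC": ("temperature_c", float),
        "AG101_RPM": ("agitation_rpm", float),
    },
}


def harmonize(source: str, record: dict) -> tuple[dict, list]:
    """Rename and convert known fields; return unmapped field names for review."""
    mapping = FIELD_MAP[source]
    harmonized, unmapped = {}, []
    for name, value in record.items():
        if name in mapping:
            std_name, convert = mapping[name]
            harmonized[std_name] = convert(value)
        else:
            unmapped.append(name)
    return harmonized, unmapped


print(harmonize("rd_eln", {"Temp_F": 104.0, "Mix_rpm": 250, "Operator": "JD"}))
print(harmonize("plant_mes", {"TT101_degC": 40.0, "AG101_RPM": 248}))
```

Once both departments report `temperature_c` in the same unit, development and plant records can be compared and mined side by side.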

Over the last decade, large investments have been made in IT infrastructure in an attempt to improve life science manufacturing performance. This began with implementations of Distributed Control Systems (DCS) and Supervisory Control and Data Acquisition (SCADA) systems to measure, record, control and report on individual equipment skids, unit operations and plants. These were followed by implementations of Laboratory Information Management Systems (LIMS), Enterprise Resource Planning (ERP), Manufacturing Execution Systems (MES), Electronic Batch Record (EBR) and Electronic Laboratory Notebook (ELN) systems. Today these systems represent “silos” of data that are rarely used for process and quality improvements on the scale needed to effect a QbD plan. The key to any “systems approach” is the ability to contextualize the “data-information-knowledge” transitions and use the results for operational excellence in R&D, pilot and manufacturing operations. The devil, of course, is in the data: what is needed is a scientifically aware informatics lifecycle management platform to capture, catalog and contextualize data, and to define an informatics process for knowledge creation that effects improvements across the continuum from product development to commercialization.
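Contextualization, in its simplest form, means joining records from separate systems on shared keys. The sketch below assumes hypothetical MES, LIMS and ELN extracts that share a batch identifier and a recipe code, and produces one record per batch that combines process conditions, QC results and the development experiment behind the recipe, including whether the plant temperature fell inside the range explored in development. The record layouts, keys and values are assumptions; real systems would first need agreed identifiers, which is where departmental standardization comes in.

```python
# Minimal sketch of contextualization: records from separate systems are
# joined on shared keys so that a quality result can be traced to the process
# conditions and to the development experiment behind it.
# System names, keys and values are hypothetical.
from collections import defaultdict

mes_batches = [  # process context from the MES / batch record
    {"batch_id": "B-001", "granulation_temp_c": 43.0, "recipe": "R-7"},
    {"batch_id": "B-002", "granulation_temp_c": 46.5, "recipe": "R-7"},
]
lims_results = [  # QC results from the LIMS
    {"batch_id": "B-001", "test": "dissolution_30min", "value": 89.2},
    {"batch_id": "B-002", "test": "dissolution_30min", "value": 85.6},
]
eln_experiments = {  # development experiments keyed by recipe
    "R-7": {"experiment_id": "EXP-114", "dev_temp_range_c": (40.0, 45.0)},
}


def contextualize(batches, results, experiments):
    """Return one record per batch combining process, quality and R&D context."""
    qc_by_batch = defaultdict(dict)
    for res in results:
        qc_by_batch[res["batch_id"]][res["test"]] = res["value"]
    merged = []
    for b in batches:
        exp = experiments.get(b["recipe"], {})
        lo, hi = exp.get("dev_temp_range_c", (float("-inf"), float("inf")))
        merged.append({
            **b,
            "qc": qc_by_batch.get(b["batch_id"], {}),
            "experiment_id": exp.get("experiment_id"),
            "within_dev_range": lo <= b["granulation_temp_c"] <= hi,
        })
    return merged


for row in contextualize(mes_batches, lims_results, eln_experiments):
    print(row)
```

A batch flagged outside the development range, as B-002 is here, is the kind of link between plant data and R&D data that siloed systems cannot provide on their own.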

Integrated Product Development and Manufacturing

Today, if we look at the journey of a product from lab to plant, we see that research informatics systems already deliver some level of custom electronic environment, enabling scientists to document, explore and protect intellectual property. Likewise, manufacturing informatics relies heavily on ERP, LIMS, MES and other disparate, silo-based systems to test quality into the product and production process. These systems are rarely linked effectively, other than for some trending reports. Development, on the other hand, has seen less informatics investment and is encumbered by many paper-based processes and data management practices. Through an effective “platform data management strategy,” capturing information across development, pilot plant and production plant processes will provide the foundation for long-term QbD operation (see figure 1).

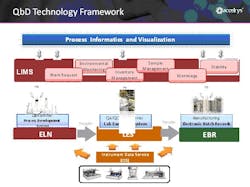

The technology elements that facilitate this “platform strategy” comprise the following components (see figure 2):

• R&D – a process and analytical electronic laboratory notebook (ELN)

• Pilot and Manufacturing (QC) – a Laboratory Execution System (LES) and Electronic Batch Records (EBR)

• Pilot, QC and Manufacturing – a next-generation Laboratory Information Management System (LIMS 2.0)

• IT/IS – a Process Informatics and Visualization system

• Instrumentation in all Groups – an Instrument Data Service (IDS) for real-time data capture and transfer

Figure 2: A QbD informatics framework consists of ELN, LES, EBR, LIMS, IDS, and process informatics and visualization technologies

Through the effective integration of these technologies, the platform for capturing, mining, analyzing and correlating development, process and quality data sets can be realized.

For QbD to work, organizations must be able to capture all the data and transform raw data into information that, when analyzed, creates the knowledge and wisdom needed to build quality into the process for next-generation operational excellence. The devil is indeed in the data.

About the Author

John P. Helfrich

Vice President
