The advent of process analytical technologies (PAT), as advanced by the U.S. Food and Drug Administration (FDA), has opened up new opportunities for the life sciences industry. Pharmaceutical manufacturers can now feel free to apply more of the systems engineering tools that are used so widely in other industries, such as data analysis, reduction, monitoring and control, to batch process manufacturing.

The goal of these systems is generally to achieve better and consistent yields, to minimize batch-to-batch variation, and to receive early warnings of the potential failure, contamination or unacceptability of an evolving batch. Biological fermentation processes are ripe for the application of such tools.

Biofermentation generates very valuable specialty products, typically in low volume, and follows a proprietary manufacturing recipe, established a priori. Manufacturing costs are high, and the fermentation process itself is fraught with risks, including contamination by foreign microorganisms and lower product yields caused by suboptimal operation or by deviations from prescribed behavior after process disturbances.

Batch-to-batch variations are also common, owing to uncertain initial conditions such as the quality of inocula. There is therefore a strong incentive to develop and deploy advanced monitoring and fault diagnosis strategies for bioprocesses. Early detection of “events” such as faults or sub-optimal behavior allows corrective action, where possible, to alleviate the fault, or a shutdown of the batch to avoid wasting expensive feed material.

Assessing the Health of an Evolving Batch

Event or fault detection and diagnosis, which assesses the health of an evolving batch at line, broadly involves two tasks:

- Extraction of all information related to the evolving batch in terms of initial conditions and all measurement records up to the current time, and

- Consolidation and comparison of this information with a template that characterizes normal operation to derive meaningful inferences about health.

Challenges of Process Assessment

a) Lack of key measurements

Fed batch/batch fermentations pose significant monitoring challenges.

Most importantly, sterility or aseptic requirements for the bioreactor and the lack of adequate on-line sensors for primary process variables, such as metabolite, microorganism, or nutrient levels, severely restrict how often the broth can be sampled. Only a few infrequent and irregular measurements of primary culture states such as biomass and nutrient levels are available. This restriction influences the monitoring strategy. Secondary process variables, by contrast, can be measured readily; these include carbon dioxide evolution rate (CER), oxygen uptake rate (OUR), acid/base addition rates, pH and temperature. Inferential estimation strategies based on such measurements can be used to infer the state and health of the evolving batch.

b) Limited understanding of the process parameter relationships

The second important limitation to bioprocess monitoring arises from a lack of detailed process knowledge about the fermentation itself. Because fermentation involves many intermediate reactions, a detailed mechanistic process model is almost impossible to develop. For this reason, model-based event detection and fault diagnosis strategies, which are relatively popular in the chemical process industries, are difficult to implement in biopharmaceutical manufacturing.

Approaches Towards Batch Process Monitoring

Monitoring and assessing the health of biopharmaceutical batch processes has been tackled broadly in three ways:

Adaptive state estimators: Such estimators rely on the use of the best available understanding of the process via a mathematical model, and also exploit measurements available from the batch, to generate inferences of some of the key batch parameters.

These estimators are designed to adapt to the time varying characteristics of the process, for example to the changes in growth and metabolite expression rates when the nutrient levels are depleted.

They require a careful choice of measurements that can be used in conjunction with the process model and need to be carefully tuned to achieve accurate reconstruction of the critical batch fermentation parameters and states.
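As a rough illustration of the idea, the sketch below tracks biomass and a slowly drifting specific growth rate from infrequent, irregularly spaced biomass samples using a small Kalman filter. The growth model (log-biomass increasing at rate `mu`, with `mu` treated as a random walk), the function name `track_growth`, and the tuning values `q_mu` and `r` are all assumptions made for this example; they are not taken from any particular plant or published estimator.

```python
import math

def track_growth(times, biomass, q_mu=1e-3, r=0.01):
    """Estimate biomass and specific growth rate mu with a 2-state Kalman filter.

    Assumed model: z = ln(biomass), dz/dt = mu, and mu drifts as a random
    walk so the estimate can adapt when growth slows down.
    q_mu (drift variance of mu per unit time) and r (measurement noise
    variance on ln(biomass)) are tuning knobs chosen for illustration.
    """
    z, mu = math.log(biomass[0]), 0.0
    p11, p12, p22 = 1.0, 0.0, 1.0  # covariance of (z, mu); vague initial guess
    history = [(times[0], math.exp(z), mu)]
    for k in range(1, len(times)):
        dt = times[k] - times[k - 1]  # sampling interval may be irregular
        # Predict: propagate state and covariance through z <- z + mu * dt
        z += mu * dt
        p11 += 2.0 * dt * p12 + dt * dt * p22
        p12 += dt * p22
        p22 += q_mu * dt
        # Update: correct both states with the new ln(biomass) sample
        s = p11 + r
        k_z, k_mu = p11 / s, p12 / s
        innovation = math.log(biomass[k]) - z
        z += k_z * innovation
        mu += k_mu * innovation
        p11, p12, p22 = (1.0 - k_z) * p11, (1.0 - k_z) * p12, p22 - k_mu * p12
        history.append((times[k], math.exp(z), mu))
    return history

# Synthetic example: exponential growth at 0.2 per hour, sampled irregularly.
sample_times = [0.0, 1.0, 2.5, 4.0, 5.0, 7.0, 8.0, 10.0]
samples = [0.5 * math.exp(0.2 * t) for t in sample_times]
for t, x_hat, mu_hat in track_growth(sample_times, samples):
    print(f"t={t:5.1f}  biomass={x_hat:6.3f}  mu={mu_hat:6.3f}")
```

The tuning trade-off mentioned above shows up in `q_mu` and `r`: a larger `q_mu` lets the growth-rate estimate react faster to changes such as nutrient depletion, at the cost of passing more measurement noise into the estimate.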

Artificial intelligence based algorithms: The second approach to the development of monitoring and fault diagnosis strategies is based on the use of expert systems and artificial intelligence based algorithms. Such approaches rely on the construction of a qualitative and quantitative database of regular and faulty modes of plant operation.

The batch operation at line is then classified as regular or faulty using a pattern classifier. Heuristics-based expert systems rely on capturing knowledge from plant personnel and take into account the different fault occurrences and process variable interactions that the plant personnel can envision, by capturing them in a rule base.
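A rule base of this kind can be pictured as a list of named conditions, each paired with the diagnosis an operator would attach to it. The sketch below is a minimal, hypothetical example: the variable names, thresholds, and diagnoses are placeholders invented for illustration and would in practice come from plant personnel, not from this article.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Measurements = Dict[str, float]

@dataclass
class Rule:
    name: str
    condition: Callable[[Measurements], bool]  # True when the rule fires
    diagnosis: str

# Hypothetical rules; thresholds and units are illustrative placeholders only.
RULES: List[Rule] = [
    Rule("low_respiration",
         lambda m: m["our"] < 5.0 and m["cer"] < 5.0,
         "OUR and CER both low: check aeration and agitation"),
    Rule("ph_excursion",
         lambda m: abs(m["ph"] - 7.0) > 0.5,
         "pH outside band: check acid/base addition"),
    Rule("temperature_excursion",
         lambda m: abs(m["temp_c"] - 37.0) > 1.5,
         "Temperature outside band: check jacket control"),
]

def classify(measurements: Measurements,
             rules: List[Rule] = RULES) -> Tuple[str, List[str]]:
    """Label a measurement record 'regular' or 'faulty' and list the reasons."""
    fired = [rule for rule in rules if rule.condition(measurements)]
    label = "faulty" if fired else "regular"
    return label, [rule.diagnosis for rule in fired]

print(classify({"ph": 7.0, "temp_c": 37.0, "our": 20.0, "cer": 19.0}))
print(classify({"ph": 6.2, "temp_c": 37.0, "our": 2.0, "cer": 1.5}))
```

Keeping each rule as data rather than as nested `if` statements makes it easier to review the rule base with the people whose knowledge it encodes.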

Statistical model-based classification: In these approaches, statistical models are developed using batch data collected during the routine normal operation of these batches. For monitoring an evolving batch, the data from the batch is compared with the template of “normal” conditions established in the statistical model and diagnosed for process upsets and sensor failures.

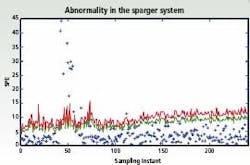

Figure 1: Multivariate control charts depicting abnormalities in the sparging system.

All these approaches work well for bioprocess monitoring because they obviate the need for understanding the process at a fundamental level and for using intrusive sensors that can potentially contaminate the fermentation broth. A number of industry tools can routinely collect and archive plant data from batch manufacturing processes. This archived data usually contains a wealth of information related to the cause and effect relationships between the fermentation parameters, which can be analyzed and fruitfully exploited to develop an improved understanding of the process.

To analyze this information, multivariate statistical tools such as Principal Components Analysis (PCA) and other data reduction algorithms can be used to visualize the parameter relationships. Multivariate statistical tools make it much easier to flag abnormal behavior during the evolution of the batch, for example contamination from foreign microorganisms or reduced aeration due to changes in agitation power. Figure 1 shows an abnormality in the sparger system that occurred at around the 50th sampling instant and was detected on the monitoring charts. It appears as a violation of the control limits (shown in red and blue, respectively, for two different statistical criteria) at the 50th instant; once the abnormality is corrected around the 70th sampling instant, the fermentor operation is classified as normal, as indicated by the points returning within the control limits.
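The sketch below shows one common way such control charts are built with PCA. The article does not name the two statistical criteria, so the choice of Hotelling's T² and the squared prediction error (SPE, also called Q) is an assumption, as are the empirical 99% control limits, the function names, and the synthetic four-variable data. Real batch data are also three-way (batch, variable, time) and need unfolding before PCA, which this example skips.

```python
import numpy as np

def _statistics(Z, P, lam):
    """Return Hotelling's T^2 and SPE for scaled data Z, loadings P, variances lam."""
    T = Z @ P                                  # scores in the PCA subspace
    t2 = np.sum(T ** 2 / lam, axis=1)          # distance within the model plane
    spe = np.sum((Z - T @ P.T) ** 2, axis=1)   # squared distance off the plane
    return t2, spe

def fit_pca_monitor(X_normal, n_components=2, quantile=0.99):
    """Build a PCA template of normal operation with empirical control limits."""
    mean = X_normal.mean(axis=0)
    std = X_normal.std(axis=0, ddof=1)
    Z = (X_normal - mean) / std
    _, S, Vt = np.linalg.svd(Z, full_matrices=False)
    P = Vt[:n_components].T                          # loadings (n_vars, n_components)
    lam = S[:n_components] ** 2 / (Z.shape[0] - 1)   # score variances
    t2, spe = _statistics(Z, P, lam)
    # Assumption: limits taken as quantiles of the reference data, not from theory.
    return {"mean": mean, "std": std, "P": P, "lam": lam,
            "t2_lim": np.quantile(t2, quantile),
            "spe_lim": np.quantile(spe, quantile)}

def monitor(model, X_new):
    """Score new samples and flag those outside either control limit."""
    Z = (np.atleast_2d(X_new) - model["mean"]) / model["std"]
    t2, spe = _statistics(Z, model["P"], model["lam"])
    flags = (t2 > model["t2_lim"]) | (spe > model["spe_lim"])
    return t2, spe, flags

# Synthetic data: four correlated variables driven by two hidden factors.
rng = np.random.default_rng(0)
W = np.array([[1.0, 0.8, 0.2, 0.5],
              [0.1, 0.5, 1.0, 0.7]])  # hypothetical mixing matrix

def simulate(n):
    return rng.normal(size=(n, 2)) @ W + 0.1 * rng.normal(size=(n, 4))

model = fit_pca_monitor(simulate(300))
batch = simulate(100)
batch[50:70, 0] += 5.0   # inject a fault on one variable for samples 50 to 69
t2, spe, flags = monitor(model, batch)
print("flagged samples:", np.flatnonzero(flags))
```

T² reacts to unusually large movements along the normal correlation pattern, while SPE reacts when the correlation pattern itself breaks, which is why the two charts are usually read together.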

Potential root causes, in terms of the fermentor states or parameters that likely contribute to the observed ‘event’, are also highlighted to aid early initiation of remedial measures that enhance batch quality. Individual methods can also be combined. For example, the artificial intelligence-based approaches can be used to resolve some of the quantitative interpretations obtained from multivariate statistical methods. Grey box models that exploit the structural knowledge of the cause-effect relationships have been used together with data-based statistical methods to develop improved monitoring procedures.

Paradigms that extend monitoring beyond batch health to batch performance and qualification can also be explored. Early event detection in batch biopharmaceutical manufacturing is important if productivity and yield are to be improved. Broad methodologies are available to help in this effort.

At this point, the statistical model-based approach has requirements that are relatively easy to meet in manufacturing practice and therefore offers good potential for deployment.