A Framework for Technology Transfer to Satisfy the Requirements of the New Process Validation Guidance: Part 2

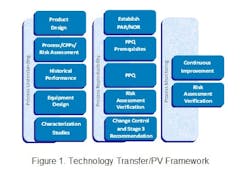

In the life of any drug product, technology transfer of a process is a complex matter, made more complicated by the new definition of process validation in the Process Validation (PV) guidance issued by FDA in January 2011. In Part 1 of this series, we laid out a practical approach to successful transfer using a real-life example. We discussed the activities required to identify and establish an effective Proven Acceptable Range (PAR) and Normal Operating Range (NOR) for a legacy product, as defined by the technology transfer framework used for this project. The framework is based on Pharmatech Associates' PV model, shown in Figure 1.

Before the Process Performance Qualification (PPQ) runs begin, the following prerequisites should be in place:

- Facility and Utility qualification

- Equipment qualification (IQ, OQ, and PQ, or equivalent)

- Analytical Method Validation is complete and Measurement System Analysis (MSA) has concluded that the resolution of the method is appropriate

- Cleaning validation protocol; cleaning method development and validation

- Upstream processing validation (for example, gamma irradiation of components, if applicable) is complete for the new batch size

- Environmental Monitoring program is in place for the new facility

- Master Batch Record

- In-process testing equipment is qualified, MSA is complete and acceptable, the method is validated, and an SOP is in place
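Whether an MSA "has concluded that the resolution of the method is appropriate" is usually judged against numeric heuristics. The sketch below assumes two common ones that the article does not itself specify: the "rule of ten" (the measurement increment is no more than one tenth of the specification tolerance) and the AIAG number of distinct categories, ndc = 1.41 × (σ_part / σ_GRR), with ndc ≥ 5 treated as adequate. Function names and example values are hypothetical.

```python
# Hypothetical MSA adequacy check; thresholds are common heuristics, not
# requirements taken from the PV guidance or from this case study.

def resolution_ok(increment: float, lsl: float, usl: float) -> bool:
    """Rule of ten: the instrument increment is at most 10% of the tolerance."""
    return increment <= (usl - lsl) / 10.0


def distinct_categories(sigma_part: float, sigma_grr: float) -> int:
    """AIAG number of distinct categories, truncated to an integer."""
    return int(1.41 * sigma_part / sigma_grr)


def msa_acceptable(increment: float, lsl: float, usl: float,
                   sigma_part: float, sigma_grr: float, min_ndc: int = 5) -> bool:
    """True only when both the resolution and the ndc heuristics are met."""
    return (resolution_ok(increment, lsl, usl)
            and distinct_categories(sigma_part, sigma_grr) >= min_ndc)


if __name__ == "__main__":
    # Assay in % label claim, specification 95.0 to 105.0 (example values only)
    print(distinct_categories(1.2, 0.3))               # 5
    print(msa_acceptable(0.1, 95.0, 105.0, 1.2, 0.3))  # True
```

In this invented example, an assay reported to 0.1% against a 95.0 to 105.0% specification, with part-to-part and gauge R&R standard deviations of 1.2 and 0.3, just meets both checks.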

In a technology transfer exercise, these elements must be applied to the new equipment and must account for the larger commercial batch size. If any element is not complete before the PPQ runs begin, a strategy may be developed, with the participation of QA, that allows the PPQ lots and the outstanding prerequisites to proceed concurrently. For example, if cleaning validation has not been completed before the PPQ runs and the PPQ lots are intended for commercial release, a risk-based approach to cleaning validation may be adopted, with studies conducted concurrently with manufacture of the lots, on the condition that the lots are not released until the cleaning validation program is complete. If such an approach is adopted, both the major clean procedure, typically performed on equipment when changing products, and the minor clean procedure, typically performed during a product campaign, must be considered.

In our case study, all prerequisites were complete except cleaning validation, which was conducted concurrently. The new site used a matrix approach to cleaning validation, bracketing its products based on an assessment of API/formulation solubility, potency, LD50, and difficulty to clean. For the PPQ runs, only the major clean procedure was used between lots, since the minor clean procedure had not been qualified.
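The bracketing step can be illustrated with a simple worst-case ranking. The sketch below is a hypothetical scheme, not the new site's actual matrix: each product is ranked on the four attributes named above (lower solubility, lower minimum dose as a proxy for higher potency, lower LD50, and a higher difficulty-to-clean rating all count as worse), the ranks are summed, and the highest total marks the worst-case product that would represent the bracket. Product names, values, and the 1 to 5 difficulty scale are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Product:
    name: str
    solubility_mg_ml: float  # lower = harder to remove from surfaces
    min_dose_mg: float       # lower minimum dose = more potent
    ld50_mg_kg: float        # lower = more toxic
    clean_difficulty: int    # hypothetical rating, 1 (easy) to 5 (hard)


# Each key returns a value where a LARGER number means a WORSE cleaning case.
WORSE_KEYS = (
    lambda p: -p.solubility_mg_ml,
    lambda p: -p.min_dose_mg,
    lambda p: -p.ld50_mg_kg,
    lambda p: p.clean_difficulty,
)


def rank_worst_case(products):
    """Return (products ordered worst to best, summed rank per product)."""
    scores = {p.name: 0 for p in products}
    for key in WORSE_KEYS:
        # Ascending sort puts the best case first, so the worst case gets rank n.
        for rank, p in enumerate(sorted(products, key=key), start=1):
            scores[p.name] += rank
    ordered = sorted(products, key=lambda p: scores[p.name], reverse=True)
    return ordered, scores


if __name__ == "__main__":
    bracket = [
        Product("A", 50.0, 100.0, 2000.0, 2),
        Product("B", 0.5, 5.0, 300.0, 4),
        Product("C", 10.0, 20.0, 800.0, 3),
    ]
    ordered, scores = rank_worst_case(bracket)
    print(ordered[0].name, scores)  # B {'A': 4, 'B': 12, 'C': 8}
```

Equal weighting and naive tie handling are simplifications; a real bracketing rationale would justify both.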

PPQ Lots

To establish an efficient PPQ plan that demonstrates process reproducibility, sampling, testing, and acceptance criteria must be carefully considered, especially for products with limited development or performance data.

PPQ Objectives

As the PV guidance states, the objective of the Process Performance Qualification is to "confirm the process design and demonstrate that the commercial manufacturing process performs as expected." The PPQ must "establish scientific evidence that the process is reproducible and will deliver quality products consistently." We took each key point of this objective in turn to establish acceptance criteria, as in the following examples:

- Process performs as expected: commercial performance is inferred from process knowledge gained during the Process Design stage;

- Process is reproducible: the process is under statistical control and is, therefore, predictable;

- Process delivers quality products consistently: process is statistically capable of producing product that meets specifications (and in-process limits) and will continue to do so.
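The third criterion, statistical capability, is often expressed as an overall process performance index, Ppk = min(USL − x̄, x̄ − LSL) / (3s), where s is the overall sample standard deviation. The sketch below assumes that index and a hypothetical minimum of 1.33; the guidance does not prescribe a particular index or threshold, and with only a handful of PPQ lots the point estimate is very uncertain, so a lower confidence bound may be more appropriate in practice.

```python
import statistics


def ppk(values, lsl, usl):
    """Overall process performance index from the sample standard deviation."""
    mean = statistics.mean(values)
    s = statistics.stdev(values)  # n - 1 denominator
    return min(usl - mean, mean - lsl) / (3 * s)


def is_capable(values, lsl, usl, min_ppk=1.33):
    """Hypothetical acceptance check: Ppk at or above an assumed minimum."""
    return ppk(values, lsl, usl) >= min_ppk


if __name__ == "__main__":
    # Illustrative assay results (% label claim), specification 95.0 to 105.0
    results = [99.0, 100.0, 101.0, 100.0, 100.0]
    print(round(ppk(results, 95.0, 105.0), 2))  # 2.36
    print(is_capable(results, 95.0, 105.0))     # True
```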

It is usually not necessary to operate process parameters at the extremes of the NOR during PPQ, since performance across the NOR should already have been established. As such, process parameter set points are not changed between PPQ lots and do not affect the number of PPQ lots required. In determining the number of lots, consideration should be given to the sources of variation and their impact on quality attributes. Suggested sources of variation to consider are:

- Number of raw material lots, particularly when a critical material attribute has been identified;

- Number of commercial scale lots previously produced during Process Design;

- Number of equipment trains intended for use with commercial production;

- Complexity of process and number of intermediate process steps;

- History of performance of commercial scale equipment on similar products;

- Number of drug strengths;

- Variation of lot size within commercial equipment;

- In-process hold times between process steps;

- Number of intermediate lots and mixing for downstream processes.

A risk analysis of these sources of variability is recommended. The number of PPQ lots can then be determined by a matrix design of the sources posing the highest risk of variation in quality attributes. Sources of variability that cannot be included in the PPQ should be considered for monitoring during Stage 3, Continued Process Verification.
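The risk analysis and matrix design described above can be sketched in code. The article does not name a risk tool, so this example assumes an FMEA-style risk priority number (RPN = severity × occurrence × detection, each scored 1 to 10) and a hypothetical cut-off of 100. Sources at or above the cut-off go into the PPQ matrix; the rest become candidates for Stage 3 monitoring. Two plans are compared: a full factorial of the selected levels, and a minimal covering plan in which every level of every selected source appears in at least one lot. All names, scores, and levels are invented.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Source:
    name: str
    severity: int    # 1-10
    occurrence: int  # 1-10
    detection: int   # 1-10, where 10 = least likely to be detected
    levels: tuple    # distinct levels that could be represented in PPQ lots

    @property
    def rpn(self) -> int:
        return self.severity * self.occurrence * self.detection


def split_by_risk(sources, cutoff):
    """Return (high-risk sources for the PPQ matrix, sources left for Stage 3)."""
    high = sorted((s for s in sources if s.rpn >= cutoff),
                  key=lambda s: s.rpn, reverse=True)
    low = [s for s in sources if s.rpn < cutoff]
    return high, low


def full_factorial(sources):
    """Every combination of levels across the selected sources."""
    return list(product(*(s.levels for s in sources)))


def covering_plan(sources):
    """Fewest lots such that each level of each source appears at least once."""
    n_lots = max(len(s.levels) for s in sources)
    return [tuple(s.levels[i % len(s.levels)] for s in sources)
            for i in range(n_lots)]


if __name__ == "__main__":
    sources = [
        Source("Raw material lots", 8, 5, 4, ("API lot A", "API lot B", "API lot C")),
        Source("Equipment trains", 7, 4, 5, ("Train 1", "Train 2")),
        Source("Drug strengths", 5, 2, 3, ("10 mg", "20 mg")),
        Source("Hold times", 6, 3, 4, ("Short", "Long")),
    ]
    high, low = split_by_risk(sources, cutoff=100)
    print([s.name for s in high])     # ['Raw material lots', 'Equipment trains']
    print(len(full_factorial(high)))  # 6
    for lot in covering_plan(high):
        print(lot)
    print([s.name for s in low])      # ['Drug strengths', 'Hold times']
```

Here the covering plan needs three lots against six for the full factorial; whether the smaller plan is adequate remains a risk-based judgment for the team, not something the code decides.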

After completing the PPQ analysis, the team revisited the risk matrix to reflect commercial operation, and the updated matrix was included in the Stage 2 final report.

Stage 3: Process Monitoring

The last stage of the new PV lifecycle is process monitoring. While monitoring has been part of the normal drug quality management system (QMS), the new PV guidance advocates moving beyond the CQAs normally reported in a product's Annual Product Review (APR) and extending monitoring to the CPPs identified as critical to process stability.

For the product in question, a protocol was drafted to gather data over the next 20 lots to establish alert and action limits for process variability. These data were to be reported on the product scorecard and included in the APR. A key expectation of Stage 3 of the PV model put forth in the guidance is the ability to adjust the process without having to revalidate it. The underlying premise is that Stages 1 and 2 provide sufficient process understanding to predict the impact of a change on product performance. Updating the risk model as new understanding becomes available allows that accumulated knowledge to be considered when a process adjustment is contemplated in the future.
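One way to turn the 20-lot data set into alert and action limits is an individuals and moving range (XmR) chart, as discussed in Wheeler's Understanding Variation (reference 7). Because the protocol's actual rules are not given, the sketch assumes that σ is estimated as the average moving range divided by d2 = 1.128, that alert limits sit at the mean ± 2σ, and that action limits sit at the mean ± 3σ (the natural process limits). The function names are hypothetical.

```python
import statistics

D2 = 1.128  # bias-correction constant for moving ranges of two consecutive lots


def alert_action_limits(values):
    """Center line plus assumed 2-sigma alert and 3-sigma action limits."""
    if len(values) < 2:
        raise ValueError("need at least two lots to compute a moving range")
    center = statistics.mean(values)
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    sigma = statistics.mean(moving_ranges) / D2
    return {
        "center": center,
        "alert": (center - 2 * sigma, center + 2 * sigma),
        "action": (center - 3 * sigma, center + 3 * sigma),
    }


def classify(value, limits):
    """Label a new lot result as 'action', 'alert', or 'ok'."""
    low, high = limits["action"]
    if value < low or value > high:
        return "action"
    low, high = limits["alert"]
    if value < low or value > high:
        return "alert"
    return "ok"
```

In use, `alert_action_limits` would be called once the protocol's 20 lots are complete, and each later lot's result passed to `classify`; how each label is handled would be defined in the site's own procedures.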

Conclusion

Transferring a legacy process with limited development and characterization data requires a clear data-gathering and analysis strategy to meet the requirements of the new PV guidance. The PPQ prerequisites that would be routine in a new drug development exercise must be addressed before the product moves to the new process train, so that the key expectations of the quality management system are met for any potential commercial material manufactured during the PPQ runs.

There is no single solution for establishing the final PPQ design, sampling, and acceptance criteria. The framework applied to this technology transfer provided a practical methodology for addressing the key elements needed to control the sources of variability that can affect commercial process reproducibility, while meeting the primary elements of the new process validation guidance.

General References

1. FDA, Guidance for Industry, Process Validation: General Principles and Practices, January 2011, Rev. 1.

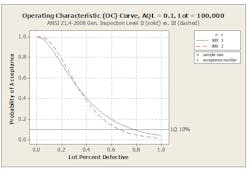

2. ANSI/ASQ Z1.4-2008, "Sampling Procedures and Tables for Inspection by Attributes."

3. Kenneth Stephens, The Handbook of Applied Acceptance Sampling Plans, Procedures and Principles, ISBN 0-87389-475-8, ASQ, 2001.

4. G.E.P. Box, W.G. Hunter, and J.S. Hunter, Statistics for Experimenters, ISBN 0-471-09315-7, Wiley Interscience Series, 1978.

5. Douglas C. Montgomery, Design and Analysis of Experiments, 5th Ed., ISBN 0-471-31649-0, Wiley and Sons, 2001.

6. Schmidt and Launsby, Understanding Industrial Designed Experiments, 4th Ed., ISBN 1-880156-03-2, Air Academy Press, Colorado Springs, CO, 2000.

7. Donald Wheeler, Understanding Variation: The Key to Managing Chaos, ISBN 0-945320-35-3, SPC Press, Knoxville, TN.

8. W.G. Cochran and G.M. Cox, Experimental Designs, ISBN 0-471-16203-5, Wiley and Sons, 1957.

9. G.E.P. Box, "Evolutionary Operation: A Method for Increasing Industrial Productivity," Applied Statistics 6 (1957) 81-101.

10. G.E.P. Box and N.R. Draper, Evolutionary Operation: A Statistical Method for Process Improvement, ISBN 0-471-25551-3, Wiley and Sons, 1969.

11. Pramote Cholayudth, "Use of the Bergum Method and MS Excel to Determine the Probability of Passing the USP Content Uniformity Test," Pharmaceutical Technology, September 2004.