Few industries face as many regulatory challenges in how they process and control their products as the life science industry: pharmaceuticals, biotechnology, medical devices and the contract companies that support them. Some companies may be able to remediate their legacy Enterprise Resource Planning (ERP) systems to meet compliance mandates; however, most “IT systems” simply will not support new compliance or global business mandates without significant total-cost-of-ownership challenges. This has led many life science organizations to implement purpose-built, commercially available software, or “secondary solutions,” and integrate it with their existing ERP technologies. Compared with costly custom coding of ERP software, this approach provides a “best-of-breed,” holistic IT system for operational excellence on a global scale. Typical secondary solutions include Laboratory Information Management Systems (LIMS), Electronic Laboratory Notebooks (ELN), Lab Execution Systems (LES) and/or Electronic Batch Record (EBR) systems for quality and manufacturing operations.

The ERP system is the controlling system for final product manufacturing and release to the marketplace; however, it must be fed data by other workflow automation systems that capture, catalog, specify, track/trace and approve data across the entire manufacturing process, from raw materials through in-process materials to final product. In fact, this product lifecycle management process starts when the formulation, synthesis or bioprocess and its analytical methods are first developed, and it is increasingly important for meeting the industry's downstream Quality by Design (QbD) operational needs.

Operational Excellence Requires Operational Data

The mantra in the C-suite of life science companies is “Operational Excellence” across all segments of the supply chain, both internal and external. As companies launch lean or Six Sigma programs and begin the now-popular externalization of processes that were previously performed in-house (from R&D through pilot operations and now into full CMO-based API production and packaging), executive managers are becoming increasingly aware that their information and data management infrastructure needs updating. The past practice of patching custom-coded business processes into years-old ERP and LIMS systems is fast becoming a bottleneck, both in the time and cost needed to complete the work and in the compliance overload of validating every custom programming effort.

A key strategic element of a successful Operational Excellence effort is capturing and cataloging the experimental and operational data streams as the product transitions from early-phase development through pilot and into commercial operations. These data are the foundation for truly transforming data management into operational wisdom (see Figure 1).

Figure 1 – The data management requirements for global operational excellence begin at the early stages of a product’s lifecycle and continue through full commercial operations.

Custom Coding vs. Purpose-Built Solutions

The data feeds for operations, which are the source for operational excellence programs, generally come from a master ERP system and a LIMS, as well as from a large array of paper-based “systems,” whether Microsoft Word documents, Excel spreadsheets, lab notebooks or point-workflow logbooks. These data can be difficult to access and generally lack the context of daily workflows. Approvals are often manual, and data are often transcribed by hand into other electronic systems. In the life science industry, this translates into a host of compliance risks for data accuracy and integrity and is often the cause of deviations from cGMP guidance. In fact, the majority of FDA Form 483 observations occur because personnel do not accurately follow written procedures.

IT management often invests heavily in customizing or configuring existing systems (ERP, LIMS, etc.) in an attempt to automate data capture. These customizations require specialists, often outside consultants, to define, custom-code and implement solutions that automate the workflows and data capture processes, and to define the compliance and validation tasks required by cGMP regulations. The bottom line is that these customizations are difficult to implement and often too costly to maintain in the long run.

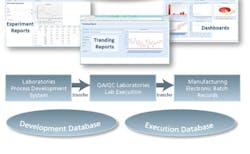

The solution is to seek out purpose-built “secondary” IT systems that are already complete and can be installed and validated in a few months, rather than the year or so needed for customized “solutions.” These secondary solutions include process development ELNs, QC/QA LES, LIMS applications designed for the life science industry, and EBR systems. Such product-based solutions, as opposed to project-based customizations, are the key to short-term success for any operational excellence initiative (see Figure 2).

Figure 2 – Product-based IT solutions: data capture and databases across the process development and execution environment, plus search tools to access and report information across the continuum. Product and lot releases from ERP are governed by these “secondary IT systems.”

Quality by Design Provides Agility

A recent operational efficiency initiative endorsed by regulatory agencies is Quality by Design (QbD). Under QbD, development data are used to establish an operational “design space,” a window within which production may modify or adjust process conditions (e.g., temperature, pressure, pH) to account for variations in raw materials or process conditions. This makes it possible to adjust manufacturing processes, as close to real time as needed, to bring the product's critical quality attributes (CQAs) into alignment without seeking agency approval for each change. This alone creates operational excellence conditions that did not exist a decade ago. The key IT component for QbD is the development data containing the cause-and-effect relationships needed for QbD correlations during plant operations and events.
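To make the design-space idea concrete, here is a minimal Python sketch that checks whether a proposed set of process settings stays inside a design space. It assumes, for simplicity, that the design space is a set of independent ranges (a box) on each parameter; real design spaces are frequently multivariate, with interacting parameters. The parameter names, ranges and the helper `outside_design_space` are hypothetical, not taken from any filing or product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParameterRange:
    """An approved operating range for one process parameter (inclusive bounds)."""
    low: float
    high: float

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


# Hypothetical design space: illustrative ranges only, modeled as an
# independent box per parameter (an assumption; real spaces may be multivariate).
DESIGN_SPACE = {
    "temperature_C": ParameterRange(20.0, 30.0),
    "pressure_bar": ParameterRange(1.0, 2.5),
    "pH": ParameterRange(6.5, 7.5),
}


def outside_design_space(settings: dict[str, float],
                         space: dict[str, ParameterRange]) -> list[str]:
    """Return names of parameters that are missing or fall outside their range."""
    violations = []
    for name, rng in space.items():
        value = settings.get(name)
        if value is None or not rng.contains(value):
            violations.append(name)
    return violations


proposed = {"temperature_C": 27.0, "pressure_bar": 2.0, "pH": 7.1}
print(outside_design_space(proposed, DESIGN_SPACE))
```

An empty list means the proposed adjustment lies inside this simplified design space; any names returned flag settings that would take the process outside it.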

Critical to the QbD process are the relationships between Critical Process Parameters (CPPs) and CQAs, which are established during the product development stages in process and formulation R&D. The development database and the execution database need specific correlations for manufacturing to operate at full efficiency. Developing an informatics system that captures these contextual correlations is key to implementing best practices for operational excellence.
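At their simplest, the CPP-to-CQA correlations described above are regression models fitted to development data. The sketch below fits an ordinary least-squares line relating one CPP to one CQA and uses it to predict the CQA at a new setting. The parameter names and batch values are invented for illustration, and a single-variable linear fit is an assumption; real QbD studies typically rely on designed experiments with several interacting factors.

```python
def fit_line(x: list[float], y: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("CPP values must vary to estimate a slope")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def predict(slope: float, intercept: float, x: float) -> float:
    """Predicted CQA value at CPP setting x."""
    return slope * x + intercept


# Hypothetical development batches: reaction temperature (CPP, deg C)
# versus product purity (CQA, %). Values are illustrative only.
temperature_c = [20.0, 22.0, 24.0, 26.0, 28.0]
purity_pct = [98.0, 98.4, 98.8, 99.2, 99.6]

slope, intercept = fit_line(temperature_c, purity_pct)
print(f"purity ~= {slope:.2f} * T + {intercept:.2f}")
print(f"predicted purity at 25 C: {predict(slope, intercept, 25.0):.2f}%")
```

Storing such fitted relationships alongside the development data is one way an execution system could later look up the expected CQA response to a proposed parameter change.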

The Role of Secondary Systems

There is growing consensus that new product development is increasingly ineffective — especially for science-driven innovation. Currently, only 25% of projects in industries ranging from pharmaceuticals to aerospace result in the commercialization of new products, according to IDC Manufacturing Insights. Of the 25% of products that make it to market, 66% fail to meet original design or consumer expectations.

For science-driven organizations, there is a productivity gap that spans the entire innovation-to-commercialization cycle. This productivity gap exists because traditional information technology solutions have proved incapable of adequately managing the complexity of scientific data and processes and the volumes of unstructured data characteristic of scientific R&D. Scientific processes, for example, are difficult to automate and track, and therefore information is hard to access and reuse during the scientific and product innovation and commercialization cycles. Critical scientific insights and context from the late discovery and development stages are never transferred to the commercialization operations (i.e., QbD needs) of a business because downstream enterprise software can’t handle the unstructured data generated in the R&D cycle. Key parts of the innovation process are consequently lost, along with actionable insights that could bring novel products to market more quickly and cost effectively.

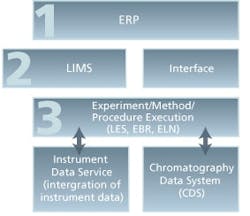

To close the productivity gap and better manage the scientific innovation lifecycle, many life science companies are taking a “systems” approach: a scientifically aware, service-oriented architecture (SOA) that enables the integration and deployment of broad scientific solutions (rather than custom-coded “projects”) spanning data management and informatics, enterprise lab management, event modeling and simulation, and workflow automation from lab to plant. The foundational elements are shown in Figure 3.

The key elements of this tiered approach are ERP, LIMS, an e-Notebook-type workflow execution system (for R&D, QC and production), and an instrument/equipment and chromatography data system (CDS) interface for full data capture from procedure development through final product production and process QC execution.
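One way to picture how the tiers cooperate is a lot-release gate: the ERP tier releases a lot only after the LIMS tier reports in-specification results for every required test and the execution tier reports an approved batch record. The Python sketch below models that rule under those assumptions; the class names, fields, test names and specification limits are hypothetical and do not represent any particular vendor's interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LabResult:
    """A single test result as a LIMS tier might report it (hypothetical shape)."""
    test: str
    value: float
    low: float   # lower specification limit
    high: float  # upper specification limit

    @property
    def passes(self) -> bool:
        return self.low <= self.value <= self.high


@dataclass
class LotRecord:
    lot_id: str
    lims_results: list[LabResult]  # from the LIMS tier
    batch_record_approved: bool    # from the EBR/execution tier


def release_decision(lot: LotRecord, required_tests: set[str]) -> tuple[bool, list[str]]:
    """Return (releasable, reasons); ERP would release only when reasons is empty."""
    reasons = []
    reported = {r.test for r in lot.lims_results}
    for missing in sorted(required_tests - reported):
        reasons.append(f"missing result: {missing}")
    for r in lot.lims_results:
        if not r.passes:
            reasons.append(f"out of specification: {r.test}")
    if not lot.batch_record_approved:
        reasons.append("batch record not approved")
    return (not reasons, reasons)


lot = LotRecord(
    lot_id="LOT-001",
    lims_results=[LabResult("assay", 99.1, 98.0, 102.0),
                  LabResult("water", 0.3, 0.0, 0.5)],
    batch_record_approved=True,
)
print(release_decision(lot, {"assay", "water"}))
```

Returning the reasons, not just a yes/no flag, mirrors the audit needs of a cGMP environment: a blocked lot carries an explanation that can be traced back to the originating tier.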

Figure 3 – A tiered IT infrastructure that enables Scientific Innovation Lifecycle Management (SILM) strategic operations for global harmonization and standardization, leading to true production operational excellence.

Closing the Gap Between Innovation and Commercialization

A critical driver for implementing secondary systems is the need for compliant operational excellence. For decades, most data has been paper-based, requiring numerous non-value-added checks to ensure end-to-end data integrity and quality from product development through commercialization. These paper-based systems, combined with local ERP and LIMS systems, become even more problematic as life science companies increasingly externalize operations. After all, the lab and the production floor are where the real work happens.

Today’s technology can eliminate these paper systems and replace them with efficient electronic environments that support everything from science to compliance. Within any pharmaceutical company there are three key informatics design issues, depending on where scientists or operators work within the lab-to-plant continuum. Research scientists require an open-ended, free-form ELN for experimental design, results capture and IP protection. QA/QC scientists and process operators in cGMP environments need just the opposite: a highly structured, procedure- and method-centric operation with full instrument integration and data exchange with other IT systems (LIMS, ERP, MES, etc.). Between discovery R&D and manufacturing QA/QC lie the unique needs of the development groups. Here, flexible experiment design coupled with parameter variation is the key documentation need. This development informatics/ELN environment enables quick technology transfer of ruggedized, automated test methods to quality operations, and of process parameters to pilot plants and manufacturing facilities. When the molecule goes into full commercial production, the informatics platform enables recursive data access supporting QbD efforts and continuous product/process improvement.

Companies can adopt an informatics approach that effectively connects the innovation and commercialization cycles with high-fidelity data that retain contextual information as a project moves from R&D through pilot and into manufacturing. Scientific Innovation Lifecycle Management (SILM) supports this approach with a comprehensive, scientifically aware informatics framework for capturing and harmonizing data along the product R&D and manufacturing/quality continuum.

Combining best-of-breed ELNs, LES, EBR and instrument integration with LIMS functionality, and with an interchange to ERP systems based on international industry standards, bridges the innovation productivity gap between development and commercialization and enables successful, end-to-end tech transfer across new product development and production/QA/QC operations. Companies adopting this informatics approach will experience:

- Better decisions through optimized experimentation and sample processing with real-time results;

- Enhanced productivity through better understanding of the design space critical to Quality by Design;

- Faster time to market through shorter cycle times and reduced latencies between cycles;

- Improved compliance through automated execution and reporting;

- Effective externalization through enhanced collaboration within globalized R&D and across dispersed internal and partner-based teams.

Published in the July 2013 issue of Pharmaceutical Manufacturing magazine

ABOUT THE AUTHOR

John P. Helfrich is the Senior Director in the ADQM solutions group at Accelrys Inc. At Accelrys, Helfrich is involved in the method and process translation of R&D and QC lab test methods/SOPs to the software conventions used in the Accelrys ELN (formerly Symyx Notebook) and Accelrys Lab Execution System (formerly SmartLab ELN).
